8 July 2025, by Sebastian Lang and Christian Wagner

Snowflake Openflow: Next-generation data integration

With Snowflake Openflow, which is based on Apache NiFi, Snowflake is expanding its platform with a powerful data integration service. As an Elite Partner, adesso was selected to test and evaluate the product exclusively ahead of its release.

What is Snowflake Openflow?

Snowflake Openflow is a cloud-based data integration solution that is fully integrated into the Snowflake platform. It is based on the popular open-source software Apache NiFi, which is known for making complex data integration easy to handle. Openflow can integrate both structured and unstructured data, such as documents, audio or sensor data, in near real time. This allows companies to orchestrate, transform and manage data streams efficiently and securely.

Technical background: Apache NiFi meets Snowflake

Apache NiFi was originally developed by the NSA and specialises in automating data flows. Its design places great emphasis on the security and traceability of the processed data. The software is characterised by a user-friendly drag-and-drop interface and the ability to monitor and manage data flows in real time. Apache NiFi provides numerous connectors and processors for integrating a wide variety of data sources and targets.

Snowflake Openflow builds on these strengths and integrates them seamlessly into the existing Snowflake infrastructure. Openflow offers predefined connectors for Microsoft Dataverse, Kafka, Google Drive, Slack, Oracle, Jira, MySQL and many more, which greatly simplifies building data flows. The connectors follow strict design principles to ensure performance, fault tolerance and easy configuration. Thanks to this out-of-the-box security and maintainability, Openflow is enterprise-ready. It also supports governance and compliance requirements through transparent traceability and data sovereignty.

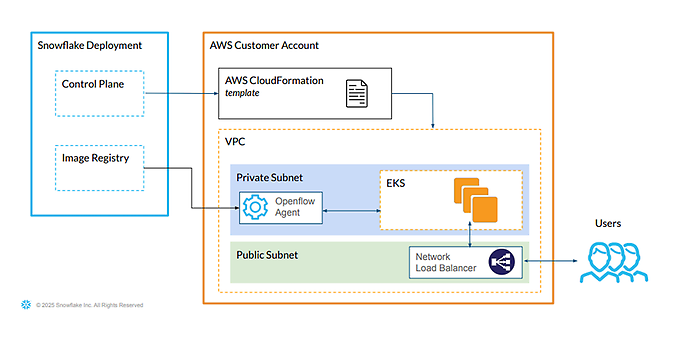

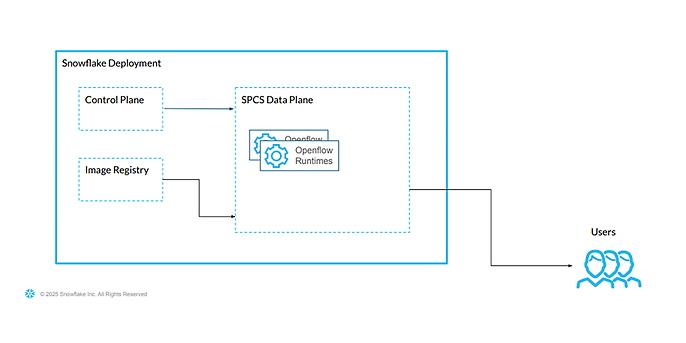

The high-level architecture models

Openflow can be operated either in your own cloud environment (BYOC, ‘bring your own cloud’), currently on AWS, or, soon, directly on Snowpark Container Services (SPCS). In both cases, the control plane is located within Snowflake; this is the area in which the Openflow pipelines are controlled and managed. The data plane is the engine room in which the flows are executed. For the BYOC variant it is located in the customer's own cloud account, and for the SPCS variant in the customer's own Snowflake account. As a result, the two variants have different cost structures.

Openflow High Level Architecture (BYOC)

Openflow High Level Architecture (SPCS)

Both options offer different advantages:

- BYOC enables companies to run Openflow entirely within their own AWS infrastructure. A CloudFormation template is provided to set up an environment with an Openflow agent (EC2) in a private subnet and an EKS cluster for flow execution. Communication with Snowflake takes place via the control plane and the image repository. Users access the system via a network load balancer. This gives companies full control over resources, network and security.

- SPCS, on the other hand, is a fully managed variant that runs directly within the Snowflake platform. The Openflow runtimes are provided in the SPCS Data Plane without the need for your own cloud resources. This makes Openflow particularly quick to deploy, low-maintenance and ideal for companies that prefer a fully integrated solution.
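The difference between the two variants can be summarised in a small Python sketch. It only encodes what is described above: where the control plane and the data plane reside, and whether the customer provisions infrastructure. The names `Deployment`, `Placement` and `placement` are hypothetical and not part of any Openflow API.

```python
from dataclasses import dataclass
from enum import Enum


class Deployment(Enum):
    BYOC = "byoc"  # runtimes in the customer's own AWS account
    SPCS = "spcs"  # runtimes in Snowpark Container Services


@dataclass(frozen=True)
class Placement:
    control_plane: str               # where the pipelines are managed
    data_plane: str                  # where the flows actually execute
    customer_provisions_infra: bool  # e.g. via the CloudFormation template


def placement(deployment: Deployment) -> Placement:
    """Illustrative summary of the two deployment models (hypothetical helper)."""
    # The control plane is located in Snowflake for both variants.
    if deployment is Deployment.BYOC:
        return Placement("Snowflake", "customer AWS account (EKS cluster)", True)
    return Placement("Snowflake", "customer Snowflake account (SPCS)", False)


for d in Deployment:
    print(d.name, placement(d))
```

Only the data plane and the responsibility for infrastructure differ between the variants, which is why they have different cost structures.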

adesso supports Snowflake in the launch of Snowflake Openflow

As a Snowflake Elite Partner, adesso actively supports the launch of Snowflake Openflow, the new platform solution for modern, cloud-based data integration. In an exclusive partnership, adesso is testing and evaluating Openflow ahead of its official market launch with the aim of helping companies build scalable, secure and AI-enabled data pipelines.

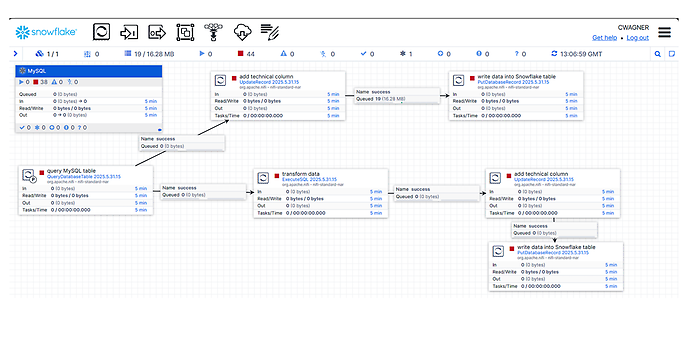

Openflow in action: Use case – data migration from MySQL to Snowflake

The following practical example illustrates how Openflow orchestrates data flows and performs transformations during the import process. The use case covers the migration of bank data from a MySQL database to Snowflake and the further processing of this data. The goal is twofold. First, the original table must be transferred completely and correctly from the source environment (MySQL) to the target environment (Snowflake), and the data is enriched with additional technical information along the way. Second, in a parallel processing step, the data from the original MySQL table is transformed to create a new data product within Snowflake.

The individual data processing steps are described below.

Canvas of the use case

First, the table containing the bank details is queried from an external MySQL database. By default, Openflow writes the query results to FlowFiles in Avro format.
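To make the FlowFile concept more tangible, here is a minimal Python sketch of this extraction step. It assumes a simplified FlowFile model: a dictionary of attributes plus content bytes, as in Apache NiFi. An in-memory SQLite database stands in for MySQL. The records are serialised as JSON instead of Avro so that the sketch runs with the standard library only. The table layout, sample rows, attribute names and the function `query_to_flowfile` are hypothetical.

```python
import json
import sqlite3
from dataclasses import dataclass, field


@dataclass
class FlowFile:
    # Simplified NiFi FlowFile: key/value attributes plus the content bytes.
    attributes: dict = field(default_factory=dict)
    content: bytes = b""


def query_to_flowfile(conn: sqlite3.Connection, table: str) -> FlowFile:
    """Query a source table and wrap the result set in a single FlowFile."""
    # The table name is assumed to come from trusted configuration, not user input.
    cur = conn.execute(f"SELECT * FROM {table}")
    columns = [d[0] for d in cur.description]
    records = [dict(zip(columns, row)) for row in cur.fetchall()]
    # Openflow uses Avro by default; JSON keeps this sketch stdlib-only.
    return FlowFile(
        attributes={"source.table": table, "record.count": str(len(records))},
        content=json.dumps(records).encode("utf-8"),
    )


# Made-up sample data standing in for the MySQL source table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bank (bank_id INTEGER, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO bank VALUES (?, ?, ?)",
    [(1, "Bank A", "DE"), (2, "Bank B", "AT")],
)
flowfile = query_to_flowfile(conn, "bank")
print(flowfile.attributes)  # {'source.table': 'bank', 'record.count': '2'}
```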

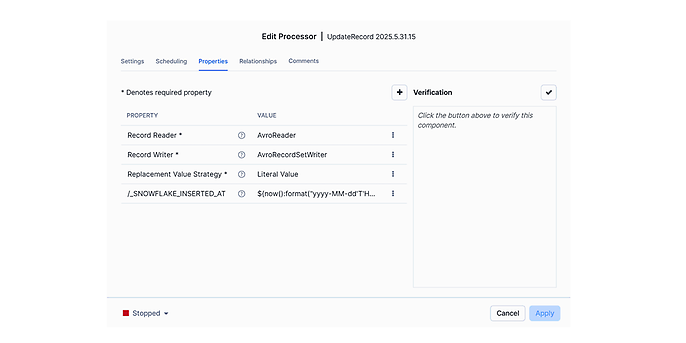

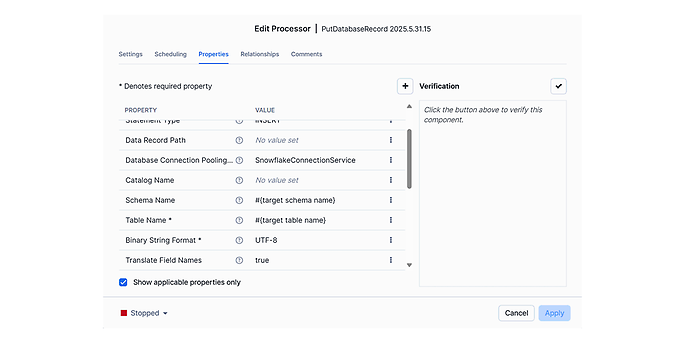

In the upper flow, the UpdateRecord processor adds a technical column (_SNOWFLAKE_INSERTED_AT) to the extracted data. The PutDatabaseRecord processor then writes the data to the target table in Snowflake.
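The logic of the upper flow can be approximated in Python as follows. This is a sketch under assumptions, not Openflow's implementation. `add_inserted_at` mimics the UpdateRecord step by adding a UTC timestamp column, and `put_records` mimics PutDatabaseRecord by inserting the records. SQLite again stands in for Snowflake. Function names, table names and sample data are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone


def add_inserted_at(records, now=None):
    """UpdateRecord-like step: add the technical column to every record."""
    ts = (now or datetime.now(timezone.utc)).isoformat()
    # Build new dicts so the incoming records stay unchanged.
    return [{**r, "_SNOWFLAKE_INSERTED_AT": ts} for r in records]


def put_records(conn, table, records):
    """PutDatabaseRecord-like step: insert records whose keys match the table columns."""
    if not records:
        return 0
    columns = list(records[0])
    sql = (
        f"INSERT INTO {table} ({', '.join(columns)}) "
        f"VALUES ({', '.join('?' for _ in columns)})"
    )
    conn.executemany(sql, [tuple(r[c] for c in columns) for r in records])
    conn.commit()
    return len(records)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE bank_target (bank_id INTEGER, name TEXT, _SNOWFLAKE_INSERTED_AT TEXT)"
)
source = [{"bank_id": 1, "name": "Bank A"}, {"bank_id": 2, "name": "Bank B"}]
fixed = datetime(2025, 7, 8, tzinfo=timezone.utc)  # fixed time for a reproducible run
written = put_records(conn, "bank_target", add_inserted_at(source, now=fixed))
print(written)  # 2
```

Passing the timestamp in explicitly keeps the enrichment step deterministic and easy to test. In a live flow it would simply default to the current time.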

Processor UpdateRecord

Processor PutDatabaseRecord

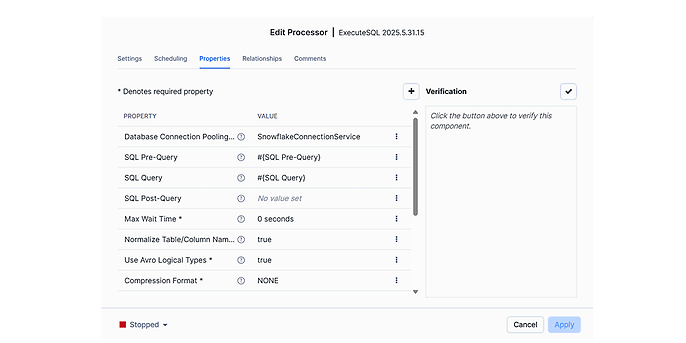

At the same time, the same data is transformed in a parallel flow (the lower flow in the canvas diagram) and also supplemented with a technical column. Finally, the data is written to a new table, producing the new data product. The ExecuteSQL processor does more than perform the data transformation. It first checks whether the required target table exists and creates it if it does not.
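The ‘create if missing, then transform’ behaviour of ExecuteSQL can be illustrated with `CREATE TABLE IF NOT EXISTS`, which both SQLite and Snowflake support. The article does not show the actual SQL. The target table `bank_by_country` and the aggregation in this sketch (number of banks per country) are therefore purely hypothetical, and SQLite stands in for Snowflake.

```python
import sqlite3

# Hypothetical target table for the new data product.
CREATE_PRODUCT = """
CREATE TABLE IF NOT EXISTS bank_by_country (
    country TEXT,
    bank_count INTEGER,
    _SNOWFLAKE_INSERTED_AT TEXT
)
"""

# Hypothetical transformation: number of banks per country.
TRANSFORM_QUERY = """
SELECT country, COUNT(*) AS bank_count
FROM bank
GROUP BY country
ORDER BY country
"""


def execute_sql_step(conn: sqlite3.Connection) -> list:
    """Create the target table only if it is missing, then run the transformation."""
    conn.execute(CREATE_PRODUCT)  # no-op if the table already exists
    cur = conn.execute(TRANSFORM_QUERY)
    columns = [d[0] for d in cur.description]
    return [dict(zip(columns, row)) for row in cur.fetchall()]


# Made-up sample rows standing in for the MySQL source table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bank (bank_id INTEGER, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO bank VALUES (?, ?, ?)",
    [(1, "Bank A", "DE"), (2, "Bank B", "DE"), (3, "Bank C", "AT")],
)
product_rows = execute_sql_step(conn)
print(product_rows)  # [{'country': 'AT', 'bank_count': 1}, {'country': 'DE', 'bank_count': 2}]
# The rows would then receive _SNOWFLAKE_INSERTED_AT and be written to bank_by_country,
# mirroring the UpdateRecord and PutDatabaseRecord steps of the first flow.
```

`IF NOT EXISTS` makes the DDL idempotent, so the step can run on every execution of the flow without failing once the table exists.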

Processor ExecuteSQL

Screenshots of the UpdateRecord and PutDatabaseRecord processors for this flow are omitted here, as they are similar to those of the first flow.

The connections between the processors route the FlowFiles according to the processing outcome (relationships such as ‘success’ and ‘failure’). Each connection acts as a queue in which the FlowFiles can be displayed both before and after processing. This makes the individual processing steps transparent and traceable.
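Conceptually, a processor takes FlowFiles from its inbound connection and routes each one to an outbound relationship, depending on the outcome. The following Python sketch models this with plain queues. `run_processor`, `parse_amount` and the sample FlowFiles are hypothetical. NiFi's real scheduling, back pressure and retry behaviour are not modelled.

```python
from collections import deque
from typing import Callable


def run_processor(inbound: deque, process: Callable[[dict], dict], outbound: dict) -> None:
    """Drain the inbound queue and route each FlowFile to 'success' or 'failure'."""
    while inbound:
        flowfile = inbound.popleft()
        try:
            outbound["success"].append(process(flowfile))
        except Exception as exc:  # any processing error routes to 'failure'
            # Keep the original data plus the error so the FlowFile can be inspected.
            outbound["failure"].append({**flowfile, "error": repr(exc)})


def parse_amount(flowfile: dict) -> dict:
    # Hypothetical processing step: convert a text field into a number.
    return {**flowfile, "amount": float(flowfile["amount"])}


inbound = deque([{"id": 1, "amount": "10.5"}, {"id": 2, "amount": "n/a"}])
outbound = {"success": deque(), "failure": deque()}
run_processor(inbound, parse_amount, outbound)
print(len(outbound["success"]), len(outbound["failure"]))  # 1 1
```

A failed FlowFile is kept in its own queue rather than dropped. This is what makes it possible to inspect it and trace why it failed.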

Multiple processors and their connections can be combined into process groups. This enables higher-level control, for example starting or stopping an entire sub-flow as a single unit.

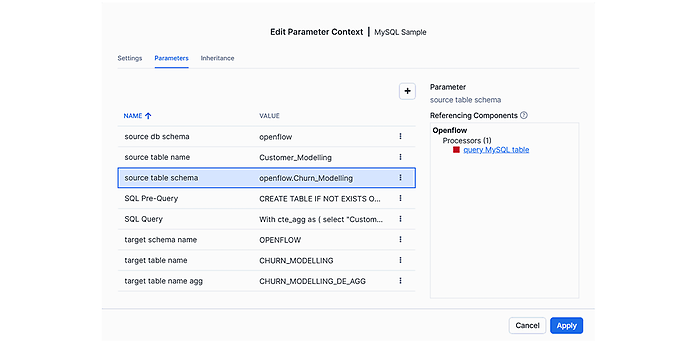

The illustrations show that parameterisation plays an important role in Openflow. Settings are maintained centrally in a parameter context, where adjustments can be made conveniently. The parameter context also shows which processors reference each parameter.
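In Apache NiFi, processor properties reference parameters with the `#{parameter name}` syntax. The sketch below shows, in simplified form, how such references are resolved against a parameter context and how the referencing processors can be found. The parameter names, processor names and property keys are hypothetical. NiFi features such as sensitive parameters or escaping are ignored.

```python
import re

# NiFi-style parameter reference, e.g. "#{target.table}".
PARAM_REF = re.compile(r"#\{([^}]+)\}")


def resolve(value: str, context: dict) -> str:
    """Replace every #{name} reference with its value from the parameter context."""
    def lookup(match: re.Match) -> str:
        name = match.group(1)
        if name not in context:
            raise KeyError(f"parameter not defined in context: {name}")
        return context[name]

    return PARAM_REF.sub(lookup, value)


def referencing_processors(processors: dict, name: str) -> list:
    """Return the sorted names of processors whose properties reference a parameter."""
    return sorted(
        proc
        for proc, props in processors.items()
        if any(name in PARAM_REF.findall(v) for v in props.values())
    )


# Hypothetical parameter context and processor properties.
context = {"source.table": "bank", "target.table": "BANK_DETAILS"}
processors = {
    "ReadSource": {"table": "#{source.table}"},
    "WriteTarget": {"table": "#{target.table}"},
    "BuildProduct": {"query": "SELECT * FROM #{target.table}"},
}
print(resolve(processors["BuildProduct"]["query"], context))  # SELECT * FROM BANK_DETAILS
print(referencing_processors(processors, "target.table"))  # ['BuildProduct', 'WriteTarget']
```

Changing `target.table` in the context updates every processor that references it. This is the central-adjustment benefit described above.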

Parameter Context

Successfully master data integration in the AI era with adesso

As a Snowflake Elite Service Partner, we bring more than 100 experts with in-depth industry and technology expertise to the table. We deliver complex ETL/ELT processes and governance projects faster, more cost-efficiently and with greater data security.

For businesses, this means:

- Faster insights thanks to high-quality data that can be used immediately

- Reduced workload for data teams thanks to automated, scalable data pipelines

- Future-proof AI projects with robust security and compliance standards

With adesso and Snowflake Openflow, data integration in the AI era is not only more efficient – it is groundbreaking.

Conclusion

Snowflake Openflow is a powerful extension of the Snowflake platform. Companies benefit from the user-friendly handling of Apache NiFi and from seamless integration into their existing Snowflake environment, which is fully managed in the SPCS variant. This makes it possible to implement initial ETL pipelines quickly and with little effort. At the same time, users can dive deep into technical details and control options as needed. The resulting costs remain manageable. This positions Openflow as a central component for efficient, scalable and reliable ETL processes.

We support you!

Would you like to modernise your data integration and exploit its full potential? We accompany you from architecture consulting to implementation – with in-depth expertise, tried-and-tested solutions and a strong team. Contact us and we will show you how to make your data integration scalable, secure and future-proof.