5 December 2025, by Mahlyar Ahmadsay and David Wedman

Implementation of Snowflake Intelligence

Snowflake Intelligence is now available for all accounts and is changing the way companies access their data and use AI. As an Elite and Launch Partner, adesso has extensively tested Snowflake Intelligence at an early stage to support customers on their journey towards data democratisation and the introduction of AI solutions.

This post does not explain what Snowflake Intelligence is; we covered that in detail in our previous blog post, ‘Snowflake Intelligence: Just ask your data’. Instead, we show how Snowflake Intelligence can be implemented in existing data and AI landscapes and give a brief overview of the most important components required for setup.

Overview

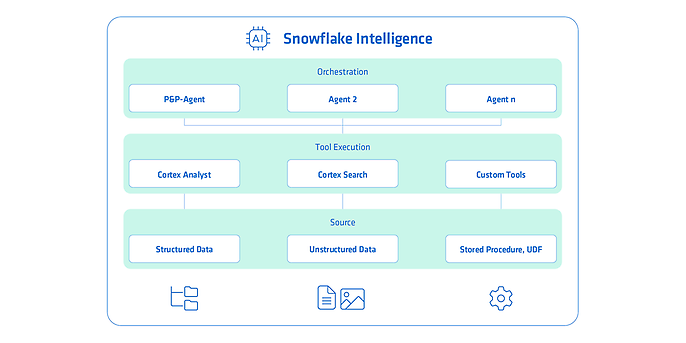

Figure 1: Snowflake Intelligence overview, source: own representation

Snowflake Intelligence is based on various data sources, which are divided into three categories: structured data (such as tables and views), unstructured data (e.g. documentation, contract data and guidelines in the form of text documents, PDFs or images) and technical components such as stored procedures and user-defined functions (UDFs).

These sources are used by various services and tools. These include the Snowflake Cortex services ‘Cortex Analyst’ and ‘Cortex Search’ as well as individually developed tools. The entire data processing is orchestrated by specialised agents (e.g. P&P Agent, Agent 2 or Agent n), which control the execution and coordination of the tools.

Use case and data basis

In our scenario, we support a technology group that relies on rare earths for the production of electronic components. With the help of Snowflake Intelligence, employees should be able to gain in-depth insights into the company's structured and unstructured data by asking simple questions, without first having to create complex analyses or dashboards.

The following data should serve as the basis for the agent:

Structured data

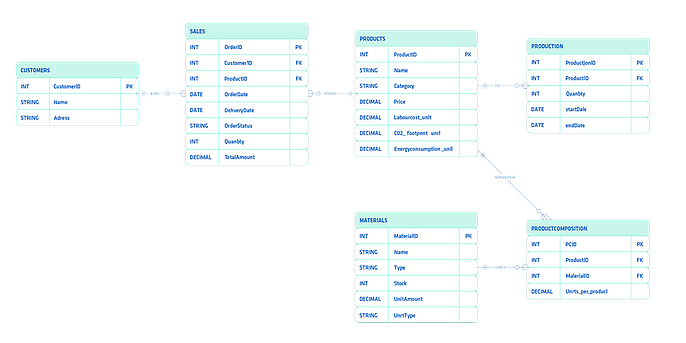

Figure 2: Entity-relationship diagram of structured data, source: own representation

Our structured data is based on product, customer and sales tables, as well as production, material and product composition tables.

Unstructured data

The unstructured data consists of PDF-based reports on environmental and sustainability requirements, which contain regulatory requirements that the company must comply with.

Implementation

To build an AI agent, we first need to provide the services it can access. For the structured data, we set up a Cortex Analyst and give it the data as a basis. We then use the Cortex Search Service to give the agent access to the unstructured data. In our case, these are the reports on environmental and sustainability requirements.

Creating the Cortex Analyst

To set up a Cortex Analyst in Snowflake, you start by creating the Cortex Analyst object in the Snowflake environment. You then select the data sources the analyst should access, typically tables or views within a schema. In our case, these are the tables described under ‘Structured data’ (sales, customers, materials, etc.).

The next step is to create a semantic view for the Cortex Analyst. This serves as a semantic layer over the data and defines how the analyst can interpret the data. It is also possible to manually maintain relationships between tables if they are not automatically recognised.
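As a rough illustration of what such a semantic layer can look like when written as DDL, the following sketch renders a `CREATE SEMANTIC VIEW` statement from a small Python description. The table names, column names and the view name are hypothetical and not taken from our data model; the clause layout follows our reading of Snowflake's semantic view syntax and should be checked against the current documentation before use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalTable:
    alias: str        # name used inside the semantic view
    source: str       # physical table or view (hypothetical names below)
    primary_key: str  # single-column key, to keep the sketch short


@dataclass(frozen=True)
class Relationship:
    name: str
    table: str        # alias of the referencing table
    column: str       # foreign-key column in that table
    references: str   # alias of the referenced table


def render_semantic_view(name, tables, relationships, dimensions, metrics):
    """Render a CREATE SEMANTIC VIEW statement as a string."""
    table_lines = [
        f"    {t.alias} AS {t.source} PRIMARY KEY ({t.primary_key})"
        for t in tables
    ]
    rel_lines = [
        f"    {r.name} AS {r.table} ({r.column}) REFERENCES {r.references}"
        for r in relationships
    ]
    dim_lines = [f"    {key} AS {expr}" for key, expr in dimensions.items()]
    metric_lines = [f"    {key} AS {expr}" for key, expr in metrics.items()]
    return "\n".join([
        f"CREATE OR REPLACE SEMANTIC VIEW {name}",
        "  TABLES (", ",\n".join(table_lines), "  )",
        "  RELATIONSHIPS (", ",\n".join(rel_lines), "  )",
        "  DIMENSIONS (", ",\n".join(dim_lines), "  )",
        "  METRICS (", ",\n".join(metric_lines), "  );",
    ])


# Hypothetical example: a manually maintained relationship between
# a sales table and a customers table.
example_ddl = render_semantic_view(
    "SALES_SEMANTIC_VIEW",
    [
        LogicalTable("sales", "ANALYTICS.CORE.SALES", "sale_id"),
        LogicalTable("customers", "ANALYTICS.CORE.CUSTOMERS", "customer_id"),
    ],
    [Relationship("sales_to_customers", "sales", "customer_id", "customers")],
    {"customers.customer_name": "customers.name"},
    {"sales.total_revenue": "SUM(sales.amount)"},
)
print(example_ddl)
```

The `RELATIONSHIPS` clause is where a join that was not detected automatically would be maintained by hand.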

Once all data sources are connected and the semantic layer is fully defined, the Cortex Analyst is ready for use. From this point on, questions can be asked in natural language, which the analyst answers based on the linked data.

Creating the Search Service

To create a Cortex Search Service in Snowflake, you start by selecting the relevant documents that you want to search later (reports on environmental and sustainability requirements).

These are first uploaded to a stage. The documents, for example PDF files, are then written to a table using the PARSE_DOCUMENT() function, which extracts the content in a structured manner.
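The parsing step could look roughly like the statement rendered below. This is a sketch under assumptions: the stage name `DOCS_STAGE` and the table name `RAW_REPORTS` are hypothetical, the stage is assumed to have a directory table enabled, and the function is addressed by its fully qualified name `SNOWFLAKE.CORTEX.PARSE_DOCUMENT` with the `LAYOUT` mode.

```python
def render_parse_statement(stage: str, target_table: str, mode: str = "LAYOUT") -> str:
    """Render a CREATE TABLE AS SELECT that parses every staged file into text."""
    if mode not in {"OCR", "LAYOUT"}:
        raise ValueError(f"unsupported mode: {mode}")
    return (
        f"CREATE OR REPLACE TABLE {target_table} AS\n"
        f"SELECT\n"
        f"    relative_path,\n"
        f"    TO_VARCHAR(\n"
        f"        SNOWFLAKE.CORTEX.PARSE_DOCUMENT(\n"
        f"            '@{stage}', relative_path, {{'mode': '{mode}'}}\n"
        f"        ):content\n"
        f"    ) AS content\n"
        f"FROM DIRECTORY(@{stage});"
    )


# Hypothetical stage and table names, for illustration only.
print(render_parse_statement("DOCS_STAGE", "RAW_REPORTS"))
```

The result is one row per file, with the file path and the extracted text side by side, which is a convenient starting point for the next step.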

The document texts are then split, typically based on headings or logical sections. This step, known as chunking, breaks long documents down into smaller, semantically meaningful units. This enables the search service to deliver more accurate search results and work more efficiently.
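To make the idea of heading-based chunking concrete, here is a minimal sketch in plain Python. It assumes the extracted text is Markdown with `#` headings; the function name, the `max_chars` limit and the sample text are our own illustrative choices, not part of Snowflake.

```python
import re

HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def chunk_by_headings(markdown: str, max_chars: int = 1500) -> list[dict]:
    """Split Markdown text into chunks, one or more per heading section.

    Sections longer than max_chars are cut at paragraph boundaries.
    A single paragraph is never split, so it may exceed max_chars.
    """
    sections: list[tuple[str, list[str]]] = [("", [])]
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            # A new heading starts a new section.
            sections.append((match.group(1).strip(), []))
        else:
            sections[-1][1].append(line)

    chunks = []
    for heading, lines in sections:
        body = "\n".join(lines).strip()
        if not body:
            continue
        piece = ""
        for paragraph in re.split(r"\n\s*\n", body):
            candidate = f"{piece}\n\n{paragraph}" if piece else paragraph
            if piece and len(candidate) > max_chars:
                # Adding this paragraph would exceed the limit: close the chunk.
                chunks.append({"heading": heading, "text": piece})
                piece = paragraph
            else:
                piece = candidate
        chunks.append({"heading": heading, "text": piece})
    return chunks


sample = "# Scope\nApplies to all plants.\n\n# Targets\nReduce emissions.\n\nReport yearly."
for chunk in chunk_by_headings(sample, max_chars=20):
    print(chunk)
```

Keeping the heading with each chunk preserves context that would otherwise be lost when a section is cut into several pieces. Snowflake also offers built-in text splitting functions, which may be preferable to custom code in practice.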

Once the data has been prepared, the Search Service is created on top of the table containing the PDF chunks. Finally, the Target Lag parameter defines the maximum delay with which the service picks up changes to the underlying data. Depending on this setting, the service refreshes its index at regular intervals so that search queries return results that are as up to date as possible.
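A possible shape of the service definition is sketched below as a small helper that renders a `CREATE CORTEX SEARCH SERVICE` statement. The service, warehouse, table and column names are hypothetical; `TARGET_LAG` is the parameter described above, set here to one hour purely as an example.

```python
def render_search_service(
    name: str,
    warehouse: str,
    source_table: str,
    search_column: str = "chunk",
    attributes: tuple[str, ...] = ("relative_path",),
    target_lag: str = "1 day",
) -> str:
    """Render a CREATE CORTEX SEARCH SERVICE statement as a string."""
    lines = [
        f"CREATE OR REPLACE CORTEX SEARCH SERVICE {name}",
        f"  ON {search_column}",
    ]
    if attributes:
        # Attribute columns can be used for filtering at query time.
        lines.append(f"  ATTRIBUTES {', '.join(attributes)}")
    columns = ", ".join([search_column, *attributes])
    lines += [
        f"  WAREHOUSE = {warehouse}",
        f"  TARGET_LAG = '{target_lag}'",
        "  AS (",
        f"    SELECT {columns}",
        f"    FROM {source_table}",
        "  );",
    ]
    return "\n".join(lines)


# Hypothetical names, for illustration only.
print(render_search_service(
    "REPORT_SEARCH", "SEARCH_WH", "REPORT_CHUNKS", target_lag="1 hour"
))
```

A shorter target lag keeps the index fresher but triggers refreshes more often, so the value is a trade-off between timeliness and cost.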

Creating the Snowflake Intelligence Agent

To build a Snowflake Intelligence agent on top of the existing Cortex Analyst and Search Service, we first create the agent object in Snowflake, specifying its database, schema and name.

The agent is then configured via four tabs:

- About: Here we define information that the end user will see later, such as name, function description and sample queries.

- Tools: Here we specify which services the agent is allowed to access, such as the Cortex Analyst, the Search Service or our own custom tools. These provide functions and procedures that the agent can execute. A description is stored for each service added so that the agent understands its function and can access it correctly. This allows the agent's behaviour to be controlled in a targeted manner and ensures that queries are processed sensibly.

- Orchestration: In this tab, we select the model to be used, for example GPT-5 or Claude Haiku 4.5. Instructions can also be stored here to control how the agent thinks through tasks, selects tools and executes actions in the correct order. In addition, budgets, time limits and token limits can be defined.

- Access: Here we define which roles are allowed to access the agent and to what extent.

This provides an agent that processes structured and unstructured data sources, interprets them semantically and answers context-related queries based on the accessible data.
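The same settings can also be captured as a specification in code. The sketch below assembles such a specification as a plain Python dictionary and checks that every tool carries a description. The field names (`models`, `orchestration.budget`, `instructions`, `tools`, `tool_resources`) reflect our understanding of the agent specification format and should be verified against the current Snowflake documentation; all object names, the model placeholder and the budget values are hypothetical. Access for roles is handled separately through grants and is not part of this sketch.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    type: str         # assumed values: "cortex_analyst_text_to_sql", "cortex_search"
    description: str  # tells the agent when to use this tool
    resource: dict    # the object behind the tool (semantic view, search service)


def build_agent_spec(model, tools, orchestration_instructions,
                     sample_questions, max_seconds=60, max_tokens=16000):
    """Assemble an agent specification as a dict (field names are assumptions)."""
    for tool in tools:
        if not tool.description.strip():
            raise ValueError(f"tool '{tool.name}' needs a description")
    return {
        "models": {"orchestration": model},
        "orchestration": {"budget": {"seconds": max_seconds, "tokens": max_tokens}},
        "instructions": {
            "orchestration": orchestration_instructions,
            "sample_questions": [{"question": q} for q in sample_questions],
        },
        "tools": [
            {"tool_spec": {"type": t.type, "name": t.name,
                           "description": t.description}}
            for t in tools
        ],
        "tool_resources": {t.name: t.resource for t in tools},
    }


# Hypothetical objects; "<model-name>" is a placeholder, not a real identifier.
spec = build_agent_spec(
    model="<model-name>",
    tools=[
        Tool("sales_analyst", "cortex_analyst_text_to_sql",
             "Answers questions about sales, customers, production and materials.",
             {"semantic_view": "ANALYTICS.CORE.SALES_SEMANTIC_VIEW"}),
        Tool("sustainability_reports", "cortex_search",
             "Searches reports on environmental and sustainability requirements.",
             {"name": "ANALYTICS.CORE.REPORT_SEARCH"}),
    ],
    orchestration_instructions=(
        "Use the analyst for figures and the search tool for regulatory text."
    ),
    sample_questions=["Have we achieved the CO2 reduction target for 2024?"],
)
print(json.dumps(spec, indent=2))
```

Writing the tool descriptions with care matters most here, because they are what the agent relies on when deciding which service to call.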

Test question and result

To test the agent we created, we asked it the following question:

‘Have we achieved the CO₂ reduction target for 2024 compared to the 2023 reference? To what extent was the target exceeded or not met?’

The answer is shown in the following video:

We receive both a graphical answer to our question and a detailed explanation. Snowflake Intelligence provides deep insights into the graphics and answers generated by the AI.

Let's go through the process from the beginning:

- The agent guides us step by step through its thought processes. It independently decides which data sources to access and which services to use based on the information we provide. In doing so, it interprets and understands both structured and unstructured data.

- For structured data, Cortex Analyst automatically generates the appropriate SQL and executes it. Unstructured data is analysed using the Cortex Search Service, which utilises vector search for this purpose.

- From the generated SQL, we can always understand the logic the agent is applying. Once the query results are available, the agent evaluates them and presents us with the final answer, supplemented by a suitable graphical representation.

The agent not only provides us with results, but also reveals its entire solution path. This keeps its approach completely transparent and easy to understand.

Other possibilities offered by Snowflake Intelligence

In addition to the functionalities already described, Snowflake Intelligence agents can obtain data via the semantic model from the Snowflake Marketplace. They can also interpret and use dashboards and KPIs from Tableau and read information from partner sources such as Salesforce. Furthermore, Snowflake Intelligence agents are able to execute both functions and procedures, which allows them to send emails automatically, for example.

Conclusion

The implementation of Snowflake Intelligence shows how AI-native functions can be used directly within the data platform without the need for additional infrastructure or complex integration paths. The combination of Cortex Analyst for structured data and Cortex Search Service for unstructured documents creates a central agent that understands data sources, interprets them semantically and makes them accessible in natural language. This enables employees without SQL knowledge or analysis tools to get well-founded answers to their questions and make data-driven decisions.

Try Snowflake Intelligence for yourself with your own data. Initial small experiments quickly show how much potential the new features offer.

We support you!

Experience how Snowflake Intelligence revolutionises your data analysis. Our experts support you in using Snowflake Intelligence – for faster insights, informed decisions and true data democracy.