8. July 2021 By Veronika Demykina

Using AI to help your attention span during video conferences – an opportunity and risk assessment

Covid-19 has held the world in its vice-like grip for over a year now. But crises are also the perfect foil for inspiring new ideas and providing the motivation needed to rethink existing processes, explore new possibilities and their limits, evaluate them and put the insights gained to profitable use in the future. The same is true of video conferencing, which has become a constant companion in the working world since the beginning of the pandemic.

Advantages and challenges

Many agree that video conferences will continue to play their part even after the coronavirus pandemic has passed. The advantages they offer are obvious – not only is the company’s travel budget spared the travel expenses (and accommodation costs if a stay at a hotel would’ve been involved), but the employees’ time is saved, too. This could also contribute to protecting the climate as removing business trips from the equation could significantly reduce rail, car and air traffic in particular.

However, using video conferencing also brings its own set of challenges with it, such as people feeling overworked, suffering from fatigue and being easily distracted. The lack of short coffee breaks or even just walking to the next conference room increases the workload. People are expected to give each individual online meeting their full attention and concentration despite the ever-changing carousel of topics and participants and the fact they’re scheduled back to back. As if that weren’t enough, only seeing the other participants on video severely limits our ability to gauge their mood and attitude, which can increase feelings of insecurity. Looking for signals of approval or disapproval, combined with the increased workload, costs us a lot of energy. This results in the spotlight falling onto a key yet sadly finite resource: our attention span.

What can we do?

However, this realisation, together with the growing amount of data traffic caused by video calls and their foreseeable continued use, could also be used to optimise virtual collaboration. If driver assistance systems can alert us that we are getting tired and need a break, why shouldn’t video conferencing do the same? Taking it a step further, the speaker could receive an anonymous notification that it might be time for a palate-cleanser activity or a break because the audience no longer seems focused or seems too distracted.

One idea about how to implement this could be to develop a system that uses video data to identify signs of inattentiveness based on head position and facial and eye features. In the best case, the system would be able to intervene immediately and help the listeners in the conference to self-regulate.
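To make the idea more concrete, here is a minimal sketch of how such signs could be turned into a number. It assumes that some upstream face-tracking component (not shown, and not specified in this article) already delivers a head rotation and an eye-openness value per video frame. The names `FrameFeatures`, `frame_is_attentive` and `attention_ratio`, as well as all threshold values, are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class FrameFeatures:
    """Hypothetical per-frame features from an assumed face tracker."""
    yaw_deg: float       # head rotation left/right, 0 = facing the screen
    pitch_deg: float     # head rotation up/down, 0 = facing the screen
    eye_openness: float  # 0.0 = eyes closed, 1.0 = eyes fully open


def frame_is_attentive(frame, max_yaw=25.0, max_pitch=20.0, min_eye_openness=0.3):
    """Rule-based guess: head roughly facing the screen and eyes open.

    The thresholds are illustrative assumptions, not validated values.
    """
    return (
        abs(frame.yaw_deg) <= max_yaw
        and abs(frame.pitch_deg) <= max_pitch
        and frame.eye_openness >= min_eye_openness
    )


def attention_ratio(frames):
    """Share of frames in a time window that look attentive (0.0 to 1.0)."""
    if not frames:
        return 0.0
    attentive = sum(1 for frame in frames if frame_is_attentive(frame))
    return attentive / len(frames)
```

A real system would most likely replace the fixed rules with a trained classifier, but a rule-based baseline like this is a useful first point of comparison.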

Deep dive: self-regulation

Self-regulation can be described as a way of controlling your own actions. Which self-regulation strategies a person uses depends on how determined they are, because self-regulation is a largely unconscious form of determination that results primarily from self-observation, self-evaluation (based on the goals you have set for yourself) and self-reaction to extrinsic and intrinsic stimuli. However, if we run into internal motivational barriers that prevent us from taking action, our volitional system comes into play. This system, which operates in parallel to motivation, is responsible for overcoming those barriers. It goes hand in hand with directing your attention in a controlled manner, which is why it feels like it takes a lot of effort: it suppresses the urge to engage in attention-breaking behaviours and avoids distractions. In the long term, this effort can damage your emotional well-being and lead to discontentment and cognitive blocks. There are various strategies to counter this, including self-observation and self-remembering.

Using machine learning, the system could predict the participants’ attention level and, for instance, recommend small exercises to improve it (support for self-observation). In this context, timely information about a participant’s inattentiveness could be displayed as a pop-up message (support for self-remembering). Deep learning, combined with new feedback data on whether the system’s prediction was right or wrong, should improve its hit rate over time. At the end of an online conference, a summary of the results could also be used as another source of data to help optimise virtual cooperation – both for the participants and for the speaker.
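The feedback loop described above can be sketched in a few lines. Note that this example deliberately swaps deep learning for a much simpler technique, an online logistic regression updated by stochastic gradient descent, because it shows the principle without any external libraries. The class name `OnlineAttentionModel`, the feature vector and the learning rate are assumptions made for illustration only.

```python
import math


class OnlineAttentionModel:
    """Tiny online logistic regression; a stand-in for a real model."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        """Estimated probability that the participant is inattentive."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, was_inattentive):
        """Apply one gradient step using the participant's feedback.

        was_inattentive is the confirmed label, e.g. taken from whether
        the participant accepted or dismissed the pop-up message.
        """
        target = 1.0 if was_inattentive else 0.0
        error = self.predict_proba(features) - target
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error
```

Each time a participant confirms or rejects a pop-up, `update` nudges the model towards the confirmed answer, which is the sense in which feedback data could improve the hit rate.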

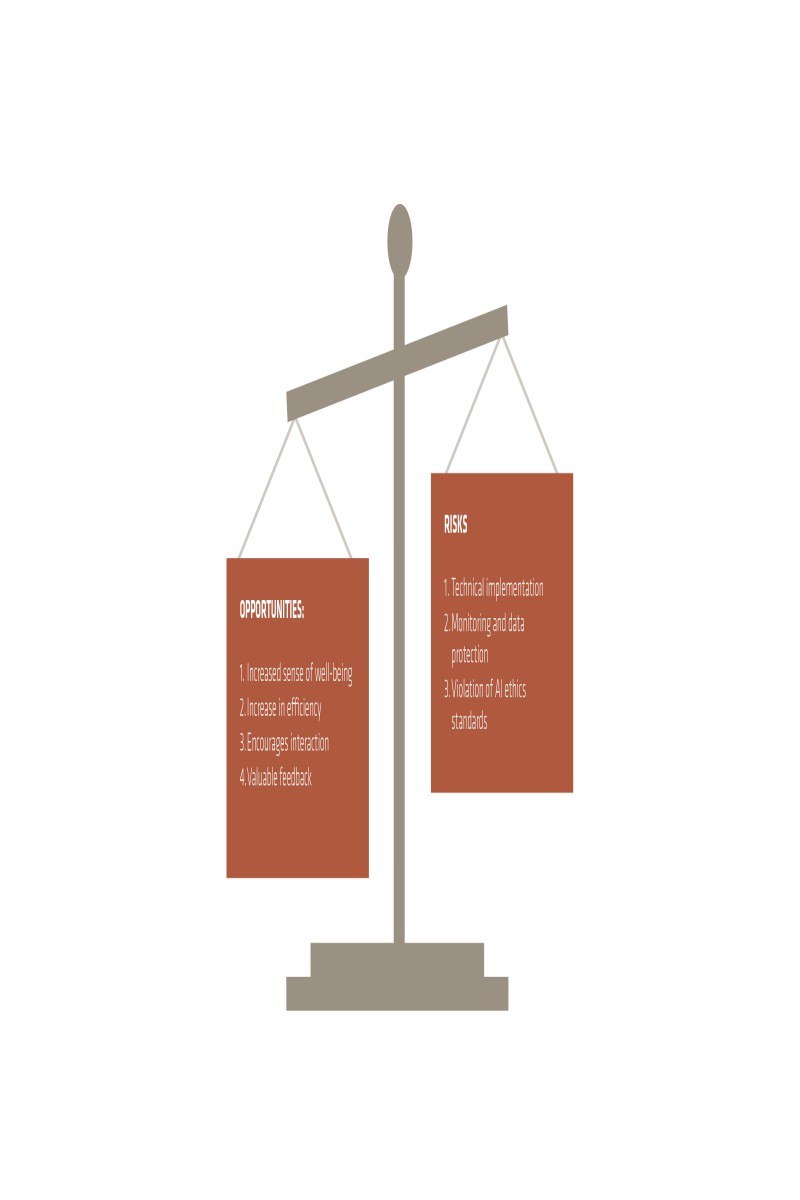

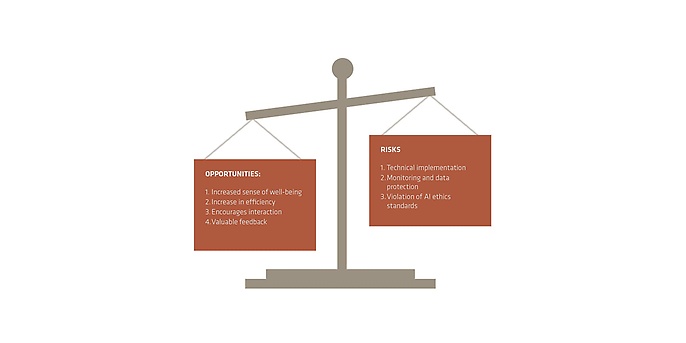

In simple terms – what opportunities and risks does this idea create in combination with AI/ML?

It’s tough to imagine life without AI nowadays. It’s a part of so many situations in people’s everyday lives and has a significant influence on how we humans work, communicate, make decisions and overcome the obstacles we face each day. AI services mean that companies require less AI expertise – which is already in short supply – to implement AI projects. These services, such as attention detection, can be connected via APIs and used in a beneficial manner. This way, companies would automatically have access to all of the updates and improved processes that are integrated into the solution.

Using AI in video conferences opens up the following opportunities:

Increased sense of well-being:

Using AI in video conferences can improve the balance between workload and breaks, as the participants’ attention span is analysed for this specific purpose. In the long term, reducing the workload in this way can lead to a higher degree of psychological well-being, both at work and in general.

Increase in efficiency thanks to an increased attention span:

The attention of the listeners can be greatly increased by actively managing the balance between workload and breaks during online conferences and meetings. This inevitably leads to video conferences becoming more efficient as more content can be absorbed and processed, increasing the productivity and performance of each individual.

Encourages interaction:

Speakers often lapse into a monologue during their presentations. They fail to notice that the participants are zoning out or doing other work at the same time, such as answering e-mails. At this point, the AI should be able to send the speaker a pop-up message early on to inform them about the low level of attention. This enables the speaker to make their presentation more interactive, for instance by including exercises or putting questions to specific people. Adding an interactive element at the opportune moment would make the participants more engaged. The participants could also be informed about their own low level of attention. This would help them refocus on the online meeting, potentially leading to more in-depth discussions between the participants and the speakers in the long run.

Valuable feedback:

At the end of an online conference or meeting, a summary of how people’s level of attention changed throughout the event could help both the listeners and the presenter in optimising the virtual collaboration. In this way, both sides receive valuable feedback, which would contribute to improving self-perception and regulation.

Opportunities: Practical example

Microsoft plans to measure well-being using biometric data to support ongoing work in MS Office applications. If the system judges the workload to be too high, the algorithm should point this out and provide suggestions on how the stress level could be reduced.

Innovative technologies such as artificial intelligence often lead to a large number of advantages and opportunities that improve everyday life. Using a system as discussed above offers promising possibilities, but other issues need to be factored in.

The following risks were identified if this idea were to be combined with AI:

Technical implementation:

Numerous internal and external factors must be taken into account when implementing the system. One of them is the real-time processing of the data, the performance of which depends on a number of different parameters. In the long term, the system would not only measure attention on the basis of video data, but also incorporate data from other input devices – such as the keyboard, mouse or microphone – into its attention detection. In the case of the microphone, background noise in the participants’ surroundings can be analysed. A noisy environment, for example, has been shown to cause concentration difficulties and reduced work performance. For that reason, a high noise level could be included in the classification as an influencing variable. The problem here is that the presenter has no influence on the noise level in the other participants’ environments: although the presenter receives a warning from the system, they cannot prevent the participants’ attention from dropping.
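As an illustration of how the noise level could become an input variable, the following sketch computes the loudness of a short block of microphone samples. It assumes the samples are already available as floating-point values between -1.0 and 1.0. The function names and the threshold of -30 dBFS are hypothetical and would need to be calibrated in practice.

```python
import math


def rms_level_dbfs(samples):
    """Root-mean-square level in dB relative to full scale (1.0)."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        return float("-inf")  # digital silence
    return 20.0 * math.log10(rms)


def is_noisy(samples, threshold_dbfs=-30.0):
    """Flag a block as noisy; the threshold is an illustrative assumption."""
    return rms_level_dbfs(samples) > threshold_dbfs
```

The resulting flag, or the level itself, could then be passed to the classifier alongside the video-based features.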

Monitoring and data protection:

Since the situation with the coronavirus has meant the majority of users are recording themselves in the privacy of their own homes, a great deal of focus also needs to be placed on monitoring and data protection. The data collected often contains location data or even personal information, such as behaviour during the conference. This makes it possible to create personal profiles, which is why the information used is classified as sensitive data. In the worst case, this type of system could also be used as a way of monitoring the participants and misused for automated performance control. That’s why data protection must have top priority so that the information obtained cannot be misused. In the best case, the system is developed in a way that avoids collecting, storing and using personal information, and the data is only made available in anonymous form, to all participants of the online conference at the same time.
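One way to approach the anonymous, group-level reporting described above is to publish only an average and to suppress it entirely when the group is too small for individuals to hide in. The sketch below is an assumption about how this could look, not a complete data protection concept. The function name and the minimum group size of five are hypothetical.

```python
from statistics import fmean


def aggregate_attention(scores, min_group_size=5):
    """Return the group's mean attention score, or None if the group is
    too small for the average to be reasonably anonymous.

    scores holds one value per participant (0.0 to 1.0) and carries no
    names or IDs, so only the aggregate ever leaves this function.
    """
    if len(scores) < min_group_size:
        return None
    return fmean(scores)
```

In a meeting with only two or three people, an average would reveal almost as much as individual values, which is why the function returns nothing in that case.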

Violation of AI ethics standards:

The system is designed to use machine learning algorithms to analyse large amounts of data and identify patterns that track the level of attention of the audience during an online conference. Based on these patterns, the system learns and makes decisions, for example, as to whether to warn the speaker that the listeners’ attention is declining. But this is precisely where the problem lies. The aspect of biased data sets and algorithms is often forgotten when evaluating large quantities of data, which can lead to unfair systems. The aim must be that the system does not disadvantage or discriminate against anyone in terms of equality and fairness.
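A simple first check for this kind of unfairness is to compare how often the system flags people in different groups as inattentive. The sketch below assumes an offline evaluation data set in which each record carries a group label, collected with consent for testing purposes only. The function names and the example group labels are hypothetical, and a gap in alert rates is only one of several possible fairness measures.

```python
from collections import defaultdict


def alert_rate_by_group(records):
    """records: iterable of (group_label, was_flagged) pairs.

    Returns the share of flagged records for each group.
    """
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, was_flagged in records:
        total[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / total[group] for group in total}


def max_rate_gap(rates):
    """Largest difference in alert rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())
```

If, say, people who wear glasses were flagged three times as often as everyone else, a large gap would show up here and would be a signal to re-examine the training data.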

User acceptance:

All the risks mentioned above have a significant influence on the acceptance of using the system. General fears among the population regarding artificial intelligence could also negatively influence user acceptance. That’s why it’s important to transparently show (potential) users the purpose the data is being used for and what the data lifecycle looks like. The emphasis should be on the personal benefits of using the technology and the fact that it is not used for surveillance, for instance.

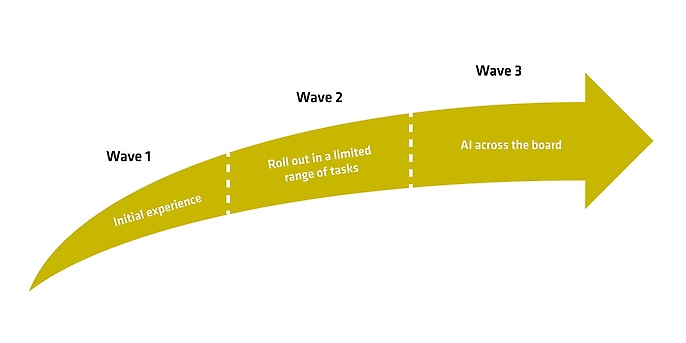

We don’t believe this type of AI-supported system can be rolled out overnight. This is primarily because it is likely to meet with resistance, since the system is designed to measure people’s cognitive characteristics in their working environment. The only way for people to realise the benefits and potentially reconsider their criticism is to use this technology first-hand. A three-stage model is the best way to roll out a system like this:

We want to hear from you!

What do you think of the idea of artificial intelligence being used to help improve attention span in video conferences? What other issues do you think should be considered when introducing a system like this? Which algorithms do you think would lend themselves to a fast but also accurate prediction of someone’s attention level? Please feel free to get in touch with me. I’d love to hear your views.
