11. July 2023 By Yelle Lieder

Sustainable AI – developing and operating artificial intelligence in an environmentally sustainable way

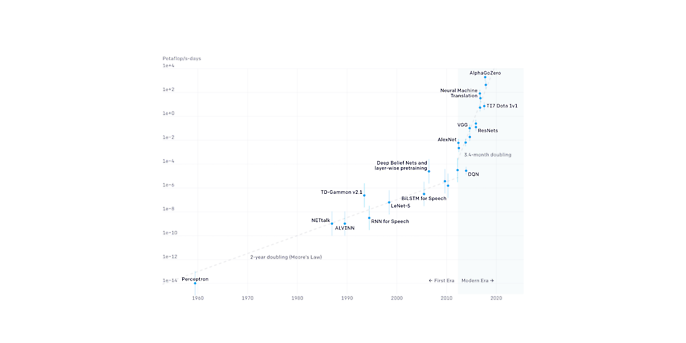

Developing and using artificial intelligence (AI) consumes electricity and thus indirectly causes greenhouse gas emissions. The manufacture, transport and disposal of the hardware required for this must also be taken into account in the life cycle assessment of AI systems. This hardware weighs on the assessment not only through the energy it consumes, but also through the rare earths that have to be mined, the water that is consumed and the waste that is created on disposal. Of course, these problems are not specific to AI. However, AI differs from traditional application software in that it requires more computing power and therefore consumes more electricity and needs more hardware. The graph below gives you an idea of how the computing power used for AI has developed. This growth was made possible by the increased availability of computing power, higher hardware efficiency, spare capacity in under-utilised cloud data centres and thus lower costs. Even if researchers assume that this trend will not continue at this pace, the environmental impact of AI has already become a challenge that needs to be addressed.

This blog post will show how the environmental impact of AI can be minimised and why training AI is often not as environmentally damaging as you might think. I will only be focussing on aspects that specifically concern AI. Take a look at this blog post to discover general tips on developing sustainable software.

The computing power required to train different AI models. Source: openai.com/research/ai-and-compute

Data

Data must be captured, loaded, cleansed, labelled and tokenised. This takes computing power, as well as bandwidth for transmission and physical storage. In addition to the traditional best practices such as aggregation, compression and filtering, you should also pay attention to the three aspects listed below when processing data in a manner that uses resources efficiently:

- Data quality: Low data quality leads to more experiments, longer training times and poorer results. That is why you should try to use curated datasets that represent the problem as accurately as possible so that you use less computing power.

- Data volume: Working with high-quality data can significantly reduce the amount of data that needs to be processed, transmitted and stored – and thus limit the volume of resources required to do it.

- Data formats: The Green Software Foundation, for example, recommends using the Parquet format as the common data format instead of csv. Parquet can be processed more efficiently, for example in Python, and therefore requires significantly less energy.
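To give a feel for why the data format matters, here is a minimal sketch that compares the size of the same values stored as csv text and as a typed binary column. It deliberately does not use Parquet itself, which would need an additional library such as pyarrow; the `array` module from the Python standard library serves as a simplified stand-in for a typed, column-oriented format. The sample readings are made up for illustration.

```python
import array
import csv
import io

# Hypothetical sample data: 10,000 sensor readings (values are made up).
readings = [20.0 + (i % 100) * 0.125 for i in range(10_000)]

# Row-oriented text storage, as in a csv file.
text_buffer = io.StringIO()
writer = csv.writer(text_buffer)
writer.writerow(["reading"])
for value in readings:
    writer.writerow([value])
csv_bytes = text_buffer.getvalue().encode("utf-8")

# Typed binary column: every value is stored as a 4-byte float.
column = array.array("f", readings)
binary_bytes = column.tobytes()

# Reading the binary column back needs no text parsing at all.
restored = array.array("f")
restored.frombytes(binary_bytes)

print(f"csv:    {len(csv_bytes)} bytes")
print(f"binary: {len(binary_bytes)} bytes")
```

Real columnar formats such as Parquet add compression and metadata on top of this idea, so the actual savings depend on the data and should be measured on your own datasets.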

Experiments

The development phase of AI systems can consume significantly more resources than that of traditional application systems. Even if data engineering and training the models are excluded from the development phase, a large volume of resources is spent on fine-tuning until the final training configuration is found. In this phase, different hyperparameters and features are tried out repeatedly on parts of the data in order to get closer to a working solution. Some aspects that need to be considered here are:

- ‘Data centricity over model centricity’: The model that fits the data and the problem should be chosen during development. Too often, models are chosen because someone has always wanted to try them out or because they are currently the subject of particularly heated discussion. This results in resources being consumed for experiments with complex models, although the problem could probably also be solved with simpler ones.

- Programming language: Our blog post about sustainable programming languages already made it clear that the pure runtime behaviour of a language is not the be-all and end-all. Runtime does matter more in AI than elsewhere, but the common Python libraries are built on low-level languages. There are therefore good reasons why Python, R, C and C++ have established themselves as standards.

- Occam’s razor: Not every problem has to be solved with AI. Problems can often be solved well enough using simple statistical methods or one-dimensional data series.
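The Occam’s razor point can be sketched as a simple check: before any model is trained, measure how far a plain statistical baseline gets. The demand figures, the moving-average baseline and the acceptable error of 8 units below are all assumptions chosen for illustration, not values from a real project.

```python
from statistics import mean

# Hypothetical daily demand figures (made-up values for illustration).
history = [102, 98, 105, 110, 107, 111, 115, 112, 118, 121, 119, 124]

def moving_average_forecast(series, window=3):
    """Predict each point as the mean of the `window` points before it."""
    return [mean(series[i - window:i]) for i in range(window, len(series))]

def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))

window = 3
predictions = moving_average_forecast(history, window)
baseline_error = mean_absolute_error(history[window:], predictions)

# Assumed requirement: an average error of up to 8 units is acceptable.
ACCEPTABLE_ERROR = 8.0
needs_complex_model = baseline_error > ACCEPTABLE_ERROR

print(f"baseline MAE: {baseline_error:.2f}")
print(f"complex model needed: {needs_complex_model}")
```

If the baseline already meets the requirement, the experiments with more complex models, and the resources they would consume, may not be needed at all.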

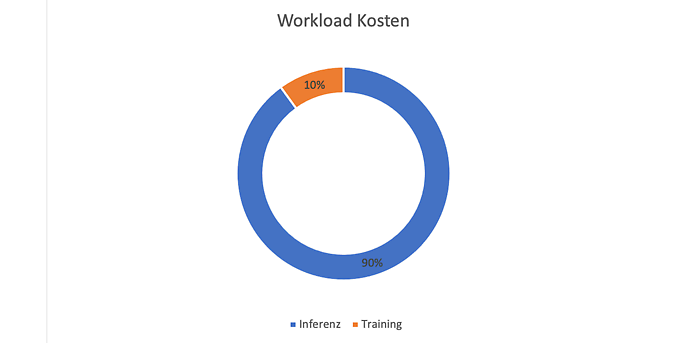

Training

Training often accounts for just ten per cent of the workload over the life cycle of an AI model. Although an individual training run is very energy-intensive, a model is trained comparatively rarely. With more complex AI methods such as neural networks in particular, the amount of energy consumed grows disproportionately with the number of parameters in the model: having twice the number of parameters leads to more than double the resource consumption due to the complex networking and iterative training. Here are some tips for sustainable training:

- Training efficiency: It goes without saying that the models’ behaviour during training should be factored in when making a decision on which model to choose, even if ‘just’ ten per cent of the emissions are generated by the training process. Plenty of research has been done on the efficiency of models and benchmarks provide a good starting point for evaluating use cases.

- GPU-optimised solutions: Training on graphics cards (GPUs) can significantly reduce the amount of energy consumed per calculation compared to training on general-purpose processors (CPUs).

- Transfer learning: Reusing pre-trained models means the computing power initially invested can also be ‘reused’ in later models. As a result, the energy costs for training the foundation model are amortised over several use cases.

- Re-training: Once they have been trained, models do not produce meaningful results forever. If you are going to re-train a model, be careful not to simply train it using every scrap of new data available just because it is there. Before each re-training cycle, check how the performance of the trained models develops over time and whether re-training makes sense.

- Memory-optimised models: Fully trained models can take up a lot of memory. Bits and bytes on a server always require the corresponding space on physical storage media, even if they are in the cloud. By using quantisation – converting continuous values into discrete values – for example, the size of many models can be reduced, thereby reducing the physical hardware required for storage.
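The quantisation mentioned in the last point can be illustrated with a minimal sketch. It assumes a simple symmetric scheme with one shared scale factor that maps 32-bit floats to 8-bit integers; the weights are made up, and real frameworks offer more refined variants of this idea.

```python
import array

# Hypothetical weights of a tiny model layer (made-up values).
weights = [-0.82, -0.31, 0.0, 0.07, 0.45, 0.93, -0.58, 0.21]

def quantise_int8(values):
    """Map floats to 8-bit integers using one shared scale factor."""
    # Fall back to a scale of 1.0 if all values are zero.
    scale = max(abs(v) for v in values) / 127 or 1.0
    quantised = array.array("b", (round(v / scale) for v in values))
    return quantised, scale

def dequantise(quantised, scale):
    """Recover approximate float values from the 8-bit representation."""
    return [q * scale for q in quantised]

quantised, scale = quantise_int8(weights)
restored = dequantise(quantised, scale)

float32_size = len(array.array("f", weights).tobytes())  # 4 bytes per weight
int8_size = len(quantised.tobytes())                     # 1 byte per weight
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"float32: {float32_size} bytes, int8: {int8_size} bytes")
print(f"largest rounding error: {max_error:.4f}")
```

The stored model shrinks to a quarter of its size in this scheme, at the price of a small rounding error per weight. Whether that error is acceptable has to be checked against the model’s actual performance.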

Average life-cycle workload cost of a deep learning model. Source: ncbi.nlm.nih.gov/pmc/articles/PMC9118914/
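To make the split between training and inference more tangible, the following sketch works through the arithmetic. The energy figures are hypothetical and chosen only to keep the numbers round; the point is how quickly many small inferences outweigh one large training run.

```python
# Hypothetical figures, chosen only to illustrate the arithmetic.
TRAINING_ENERGY_KWH = 500.0        # one complete training run
ENERGY_PER_INFERENCE_KWH = 0.0005  # a single request to the model

def training_share(num_inferences, training_runs=1):
    """Fraction of life-cycle energy that is spent on training."""
    training = TRAINING_ENERGY_KWH * training_runs
    inference = ENERGY_PER_INFERENCE_KWH * num_inferences
    return training / (training + inference)

# Number of requests after which inference has used as much energy as training.
break_even = TRAINING_ENERGY_KWH / ENERGY_PER_INFERENCE_KWH

# Number of requests at which training is down to ten per cent of the total.
ten_per_cent_point = 9 * break_even

print(f"break-even after {break_even:,.0f} inferences")
print(f"training share at {ten_per_cent_point:,.0f} inferences: "
      f"{training_share(ten_per_cent_point):.0%}")
```

With these assumed values, training and inference draw level after the break-even number of requests, and every request beyond that shifts the balance further towards inference.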

Inference

Inference, that is, calculating a result from a trained model, accounts for about 90 per cent of the workload over the life cycle of many models. Although a single inference consumes only a small amount of energy compared to training, the sheer volume of inferences performed on an average model means that the potential for sustainability gains is often greater in inference than in the actual training. Some measures to reduce this consumption include:

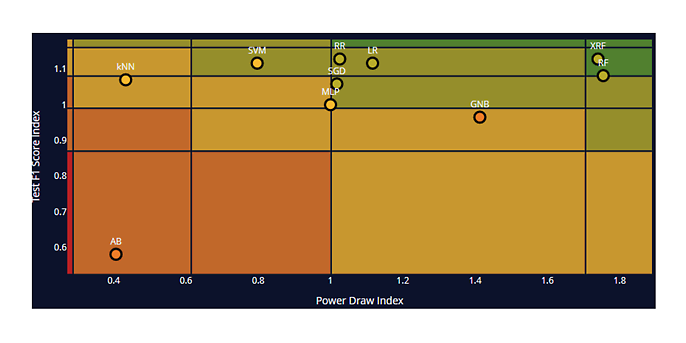

- Inference-optimised models: The problem of inference is a well-known one and there are public benchmarks such as ELEx – shown in the figure below – that you can use as a reliable guide to find out which models to look at first in terms of efficient inference. When looking at the relationship between the performance of the models and their energy consumption, it becomes clear that some models deliver comparable performance with significantly different energy consumption.

- Edge computing: Moving the inference or sometimes even the training process to the edge – in other words, closer to end devices – allows you to combine two advantages at once: there is less strain on the transmission networks, which reduces the amount of energy consumed for data transmission, and the end devices’ processors are often not even close to being fully utilised. Because power consumption does not rise linearly with utilisation, the additional load from some inference only increases power consumption to a small extent. Edge computing also requires less physical hardware in the data centres.

- Caching: Expected or particularly frequent requests for inferences can be stored temporarily. This way, actual inference does not have to be performed each time for similar queries. In this case, retrieving a response that has already been saved is often sufficient.

Benchmark on performance and energy consumption of AI model inference. Source: ELEx – AI Energy Label Exploration 2023
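A benchmark of this kind can be used to shortlist models in a structured way. The sketch below picks the model with the lowest energy consumption that still meets a required accuracy. The entries, field names and threshold are made up for illustration; real values would have to come from a benchmark such as ELEx.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BenchmarkEntry:
    model: str
    accuracy: float              # share of correct predictions, 0..1
    energy_per_inference: float  # watt-seconds per inference

# Made-up entries; real values would come from a public benchmark.
entries = [
    BenchmarkEntry("model_a", 0.80, 0.9),
    BenchmarkEntry("model_b", 0.79, 0.3),
    BenchmarkEntry("model_c", 0.74, 0.1),
    BenchmarkEntry("model_d", 0.81, 2.4),
]

def most_efficient(candidates, min_accuracy):
    """Return the lowest-energy model that still meets the accuracy target."""
    good_enough = [e for e in candidates if e.accuracy >= min_accuracy]
    if not good_enough:
        return None
    return min(good_enough, key=lambda e: e.energy_per_inference)

choice = most_efficient(entries, min_accuracy=0.78)
print(choice.model if choice else "no model meets the target")
```

In this made-up example, the selected model gives up very little accuracy compared to the best-performing entry while using a fraction of its energy per inference.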

Looking to the future

Admittedly, only a handful of these measures are anything groundbreaking. Most of them are simply part of every data scientist’s toolkit. However, in addition to all the measures we have discussed to make AI more sustainable, transparency is presumably the most important aspect. Consumers often have no real idea about how much physical infrastructure is needed to provide virtual services and the amount of resources it consumes. This means that anyone offering sustainable AI also needs to be transparent about their resource consumption – both for users, to encourage them to adopt sustainable behaviours and make sustainable choices, and for developers and decision-makers. You can only perform an environmental cost-benefit assessment if you know how much a system actually costs. Moreover, it must be clear that sustainability cannot be the only decision-making criterion when selecting technologies. Rather, sustainability must be considered as one of many criteria in the future.

While this blog post might not read exactly like an ode to AI, there is one key point to take away here: above all, AI offers the potential to further environmental protection and drive us towards achieving the global sustainability goals. Ways this could be done include using smart solutions to accelerate the energy transition, contributing to decarbonisation in the areas of production, mobility, construction and logistics, enabling adaptation in agriculture and forestry and increasing resilience against man-made climate change. While it goes without saying that we also have to get better at not simply throwing AI at every single problem we encounter, we must not forget that it can be a decisive way to increase sustainability if we pay attention to the rules it entails and use it responsibly.

