2 June 2025, by Gianluca Colaianni

Moving business data to Flowable with speed and precision

In today's fast-paced business environment, efficient data migration is crucial for maintaining seamless operations and enhancing productivity. This article focuses on the practical use of Quarkus to migrate a large volume of data and documents from a legacy system to Flowable.

By using Quarkus to build the migration tool on top of the Flowable APIs, we moved the customer's application to a modern, scalable and agile platform that is fully compliant with the BPMN standard. Our priorities were performance and ensuring that the client's day-to-day business was not affected. Read on to discover the challenges we overcame and the strategies we employed.

Enabling seamless migration for lasting customer value

We recently took part in one of our customer’s strategic initiatives within their Citizen Development program. The customer aimed to redevelop a discontinued legacy application on the Flowable platform, reusing the existing expertise and custom components within its ecosystem. This is a typical scenario in our projects, with two notable differences:

1. The application is publicly accessible.

2. The legacy system had been online for several years, and migrating (part of) the business data as well as the relevant documents was a hard requirement.

To ensure a smooth transition, we developed a comprehensive migration plan covering all necessary activities and measures. The plan aimed to protect data integrity, maintain high quality standards, and prevent any disruption to the application's business operations.

On top of the ambitious scope of the project, we faced a significant constraint during setup: we had no direct access to the Flowable infrastructure, including the database. Our only channel to Flowable was the APIs exposed by Flowable Work. This limitation required careful planning and creative solutions to complete the data migration without compromising the integrity or performance of the new system.

Faster delivery, full control for the customer

The architecture of the new Flowable solution was designed to use Flowable's own capabilities for storing business-relevant data: metadata was stored in Flowable data objects, while documents were managed as Flowable Content Items. This kept all relevant information organized within the Flowable ecosystem, which simplified access and management. The approach also aligns with the customer’s Citizen Development program, in which the organization's IT provides the platform and tools for users to build their own applications with low-code methods, reducing the need for external systems or infrastructure.
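The following minimal sketch illustrates this split under a hypothetical legacy record shape: the metadata becomes a data-object payload, and each document becomes a content-item descriptor linked to it by the same business key. The types and field names (`LegacyCase`, `DataObjectPayload`, `ContentItemDescriptor`, `CaseSplitter`) are illustrative assumptions, not part of any Flowable API, and nothing is sent to Flowable here.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical shape of one record extracted from the legacy database.
record LegacyCase(String caseNumber, String applicant, String status, List<String> documentPaths) {}

// Hypothetical payload destined for a Flowable data object (metadata only).
record DataObjectPayload(String key, Map<String, Object> fields) {}

// Hypothetical descriptor for one document to be uploaded as a content item.
record ContentItemDescriptor(String ownerKey, String name, String sourcePath) {}

final class CaseSplitter {
    private CaseSplitter() {}

    // Metadata goes into the data object; the case number serves as business key.
    static DataObjectPayload toDataObject(LegacyCase c) {
        Map<String, Object> fields = new LinkedHashMap<>();
        fields.put("caseNumber", c.caseNumber());
        fields.put("applicant", c.applicant());
        fields.put("status", c.status());
        return new DataObjectPayload(c.caseNumber(), fields);
    }

    // Each legacy document becomes one content item that references the same key.
    static List<ContentItemDescriptor> toContentItems(LegacyCase c) {
        return c.documentPaths().stream()
                .map(p -> new ContentItemDescriptor(c.caseNumber(), fileName(p), p))
                .toList();
    }

    // Strips the directory part, accepting both '/' and '\' separators.
    private static String fileName(String path) {
        int slash = Math.max(path.lastIndexOf('/'), path.lastIndexOf('\\'));
        return path.substring(slash + 1);
    }
}
```

Keeping the business key on both sides is one way to reconnect documents with their metadata after upload; the project's actual Flowable data model is not shown here.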

First, we designed the migration plan, defined key performance indicators (KPIs) to evaluate the quality of the migrated data, and put rollback measures in place. We scheduled multiple iterations to support learning and improvement throughout the process.
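As an illustration of how such KPIs can gate an iteration, here is a small sketch. The metrics (record reconciliation, document completeness, success rate) and the `MigrationKpis` type are assumptions made for the example, not the project's actual KPI set, and the pass threshold is supplied by the caller rather than taken from the project.

```java
// Hypothetical KPI snapshot for one migration run; the metrics are illustrative.
record MigrationKpis(long sourceRecords, long migratedRecords, long failedRecords,
                     long sourceDocuments, long migratedDocuments) {

    // Share of source records that arrived in Flowable, in percent.
    double recordSuccessRate() {
        return sourceRecords == 0 ? 100.0 : 100.0 * migratedRecords / sourceRecords;
    }

    // Every source record must be accounted for: either migrated or explicitly failed.
    boolean recordsReconciled() {
        return migratedRecords + failedRecords == sourceRecords;
    }

    // All documents found in the source must have been uploaded.
    boolean documentsComplete() {
        return migratedDocuments == sourceDocuments;
    }

    // A run passes only if it reconciles, is complete and meets the threshold;
    // otherwise it is a candidate for rollback.
    boolean passes(double minSuccessRatePercent) {
        return recordsReconciled() && documentsComplete()
                && recordSuccessRate() >= minSuccessRatePercent;
    }
}
```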

Each phase included thorough testing and validation. Every iteration transferred a subset of the data, followed by comprehensive quality checks and performance assessments. This incremental approach let us refine the process step by step and adapt quickly to emerging challenges.
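The article does not say how the subsets were chosen. One option, shown here as a hypothetical sketch, is a deterministic hash-based sample: each ID always falls into the same bucket, so a 10% trial is contained in a later 25% trial and the results of successive iterations stay comparable. `IterationPlanner` and its parameters are illustrative.

```java
import java.util.ArrayList;
import java.util.List;

final class IterationPlanner {
    private IterationPlanner() {}

    // Selects roughly `percent` % of the IDs by hashing each ID into one of 100 buckets.
    // String.hashCode is stable across JVM runs, so the same ID always lands in the
    // same bucket and a smaller sample is always a subset of a larger one.
    static List<String> sample(List<String> ids, int percent) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 0 and 100");
        }
        List<String> selected = new ArrayList<>();
        for (String id : ids) {
            if (Math.floorMod(id.hashCode(), 100) < percent) {
                selected.add(id);
            }
        }
        return selected;
    }
}
```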

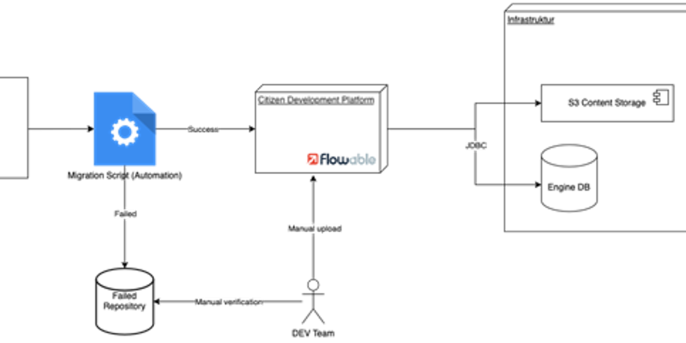

Figure 1: Migration flow

Our team used Quarkus to build a robust and efficient migration tool. Quarkus, a well-known Java framework recognized for its fast startup times and low memory footprint, proved to be an ideal choice. The tool integrated directly with the Flowable APIs, enabling secure and accurate data transfer, and we paid particular attention to data consistency and integrity throughout the migration.
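In a Quarkus application, calls to a remote API are typically made through the MicroProfile REST Client. To keep the sketch below free of dependencies, it uses the JDK's `java.net.http` instead and only builds the request. The base URI, the relative path and Basic authentication are placeholders; the actual endpoints and authentication scheme depend on the Flowable Work installation, and `FlowableRequests` is a hypothetical helper.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.Base64;

final class FlowableRequests {
    private final URI baseUri;
    private final String authHeader;

    // Placeholder base URI and credentials; Basic auth is an assumption for the sketch.
    FlowableRequests(URI baseUri, String user, String password) {
        this.baseUri = baseUri;
        String token = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        this.authHeader = "Basic " + token;
    }

    // Builds (but does not send) a JSON POST against a path relative to the base URI.
    HttpRequest postJson(String relativePath, String json) {
        return HttpRequest.newBuilder(baseUri.resolve(relativePath))
                .timeout(Duration.ofSeconds(30))
                .header("Authorization", authHeader)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }
}
```

A request built this way can be sent with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`, checking the status code before treating the item as migrated.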

During the migration we encountered several challenges, including discrepancies in data formats and structures between the legacy system and Flowable. To address these, we implemented data transformation and mapping strategies that kept the migrated data compatible and coherent. We also established a rollback mechanism to quickly revert to the previous state in the event of critical issues.
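A typical transformation step normalizes dates and translates codes. The sketch below assumes a legacy `dd.MM.yyyy` date format and single-letter status codes, both hypothetical. It returns `Optional.empty()` instead of throwing, so that a bad value can be logged against its record rather than aborting the whole run.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.time.format.ResolverStyle;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

final class LegacyValueMapper {
    private LegacyValueMapper() {}

    // Assumed legacy date format. STRICT rejects impossible dates such as 31.02.,
    // and STRICT requires 'uuuu' (proleptic year) instead of 'yyyy' (year-of-era).
    private static final DateTimeFormatter LEGACY_DATE =
            DateTimeFormatter.ofPattern("dd.MM.uuuu").withResolverStyle(ResolverStyle.STRICT);

    // Hypothetical mapping from legacy status codes to values of the new data model.
    private static final Map<String, String> STATUS = Map.of(
            "O", "OPEN",
            "C", "CLOSED",
            "X", "CANCELLED");

    // Converts a legacy date to ISO-8601 (yyyy-MM-dd); empty if blank or invalid.
    static Optional<String> toIsoDate(String legacy) {
        if (legacy == null || legacy.isBlank()) return Optional.empty();
        try {
            return Optional.of(LocalDate.parse(legacy.trim(), LEGACY_DATE).toString());
        } catch (DateTimeParseException e) {
            return Optional.empty();
        }
    }

    // Translates a legacy status code; empty if the code is unknown.
    static Optional<String> toStatus(String legacyCode) {
        if (legacyCode == null) return Optional.empty();
        return Optional.ofNullable(STATUS.get(legacyCode.trim().toUpperCase(Locale.ROOT)));
    }
}
```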

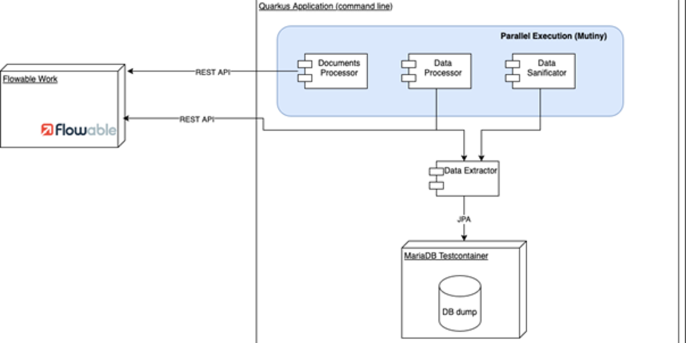

Performance was a major challenge: transferring such a large amount of data took several hours. To speed up the process, we parallelized the work using Mutiny, the reactive programming library used by Quarkus. This distributed the workload across multiple threads, reducing the overall migration time and making it easy to run several migration trials, which kept the project on schedule.
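The project used Mutiny for this; in Mutiny, bounded concurrency is commonly expressed with `Multi.onItem().transformToUni(...).merge(n)`. The sketch below shows the same bounded-parallelism idea with plain `java.util.concurrent` so that it runs without extra dependencies. It is a substitute for illustration, not the project's code, and `migrateOne` stands in for the hypothetical per-record API calls.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

final class ParallelMigrator {
    private ParallelMigrator() {}

    // Runs `migrateOne` for every item on a fixed-size pool, so at most `parallelism`
    // items are in flight at once, and returns the results in input order.
    static <T, R> List<R> migrateAll(List<T> items, Function<T, R> migrateOne, int parallelism)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(parallelism);
        try {
            List<Future<R>> futures = new ArrayList<>();
            for (T item : items) {
                futures.add(pool.submit(() -> migrateOne.apply(item)));
            }
            List<R> results = new ArrayList<>(futures.size());
            for (Future<R> f : futures) {
                // A failed item surfaces here as an ExecutionException wrapping its cause.
                results.add(f.get());
            }
            return results;
        } finally {
            // Cancels any remaining work if a failure aborted the loop above.
            pool.shutdownNow();
        }
    }
}
```

The `parallelism` value is worth tuning against what the target API can sustain, since additional threads only help until the server becomes the bottleneck.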

Another challenge was the limited capabilities of the migration tool's execution environment, which were constrained by the security protocols of the customer organization. We had access to a Docker environment, so we used a test container managed by the Quarkus application to host the database dump from the legacy system. This setup let us efficiently extract the data relevant for the migration using Hibernate and JPA (in the Quarkus flavour, “Panache”). Moreover, the lifecycle of the test container was tied to the lifecycle of the Java application, ensuring a proper shutdown at the end of the execution. Figure 2 illustrates this setup.

Figure 2: Migration tool architecture
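Coupling a container to the application's lifecycle can be sketched with `AutoCloseable` and a JVM shutdown hook. `DumpDatabase` is a hypothetical interface; a Testcontainers container, which implements `AutoCloseable`, could sit behind it. The wrapper below stops the container exactly once, whether the run ends normally or the process is interrupted.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical abstraction over the container that hosts the restored legacy dump.
interface DumpDatabase {
    void start();
    void stop();
}

// Starts the container on construction and stops it exactly once: either via
// close() (try-with-resources) or, if the JVM is interrupted, via a shutdown hook.
final class ManagedDumpDatabase implements AutoCloseable {
    private final DumpDatabase db;
    private final AtomicBoolean stopped = new AtomicBoolean(false);
    private final Thread hook;

    ManagedDumpDatabase(DumpDatabase db) {
        this.db = db;
        db.start();
        this.hook = new Thread(this::stopOnce, "dump-db-shutdown");
        Runtime.getRuntime().addShutdownHook(hook);
    }

    private void stopOnce() {
        if (stopped.compareAndSet(false, true)) {
            db.stop();
        }
    }

    @Override
    public void close() {
        stopOnce();
        try {
            // Normal exit: the hook is no longer needed.
            Runtime.getRuntime().removeShutdownHook(hook);
        } catch (IllegalStateException e) {
            // The JVM is already shutting down; the hook handles (or handled) the stop.
        }
    }
}
```

Wrapping the whole migration run in `try (var db = new ManagedDumpDatabase(...)) { ... }` covers the normal path, while the hook covers interruptions such as Ctrl+C (though not a forced kill).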

In addition, we designed and implemented the rollback mechanism so that any change applied during the migration could be reverted. It relied on detailed trace and log files to clean up the environment thoroughly. This rollback capability proved particularly valuable for running multiple migration trials, allowing us to refine and optimize the whole process safely.
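The trace files are not described in detail, so the following is a hypothetical sketch of the idea: every resource created through the API is appended to a journal immediately, and rollback replays the journal newest-first, deleting each entry through a caller-supplied function. `RollbackJournal`, the tab-separated line format and the `delete` callback are assumptions.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.BiPredicate;

final class RollbackJournal {
    private final Path file;

    RollbackJournal(Path file) {
        this.file = file;
    }

    // Call right after each successful create call. One line per resource
    // ("type<TAB>id"), appended immediately so the journal survives a crash mid-run.
    void record(String type, String id) throws IOException {
        String line = type + "\t" + id + System.lineSeparator();
        Files.writeString(file, line, StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }

    // Replays the journal newest-first, so resources created later (e.g. documents)
    // are removed before the ones they depend on. `delete` receives (type, id) and
    // returns true on success; entries that could not be deleted are returned.
    List<String> rollback(BiPredicate<String, String> delete) throws IOException {
        if (!Files.exists(file)) return List.of();
        List<String> lines = new ArrayList<>(Files.readAllLines(file, StandardCharsets.UTF_8));
        Collections.reverse(lines);
        List<String> failed = new ArrayList<>();
        for (String line : lines) {
            if (line.isBlank()) continue;
            String[] entry = line.split("\t", 2);
            // A malformed line cannot be replayed automatically.
            if (entry.length < 2 || !delete.test(entry[0], entry[1])) {
                failed.add(line);
            }
        }
        return failed;
    }
}
```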

Conclusion

Despite the complexity of the project, careful planning combined with an adaptive, iterative approach allowed us to deliver a smooth and successful data migration for our customer. With the transition to Flowable, we not only modernized the customer’s application landscape but also positioned them to leverage the full capabilities of a BPMN-compliant platform, advancing the customer's Citizen Development strategy. Our data migration strategy proved reliable, scalable and accurate: the customer could run the data migration iteratively, verify the quality of the data, and rely on a well-understood, repeatable migration process in production.

Feel free to reach out if you need further details. Our “Business Automation” Competence Center has extensive experience in data migration projects.