30 November 2021, by Magdalena Stern

Introduction to the relevance of AI ethics

Artificial intelligence changes the perspective. This is the guiding belief of our adesso AI campaign, and at the same time it points to an important aspect that needs to be considered. AI will have a significant impact on our future, so we need to examine and analyse it from all sides: not only to see the opportunities and possibilities, but also to become aware of the existing risks, so that we can face them and look to the future with confidence.

Specifically, this post is about the relevance of the ethical aspects of artificial intelligence, or AI ethics for short. I am not referring to the headlines suggesting that machines will destroy humanity. Instead, it is about being mindful of the issue and giving it the space it needs. For even if such headlines on the Internet or in book titles seem exaggerated, they nevertheless draw attention to a possible danger that we need to be aware of.

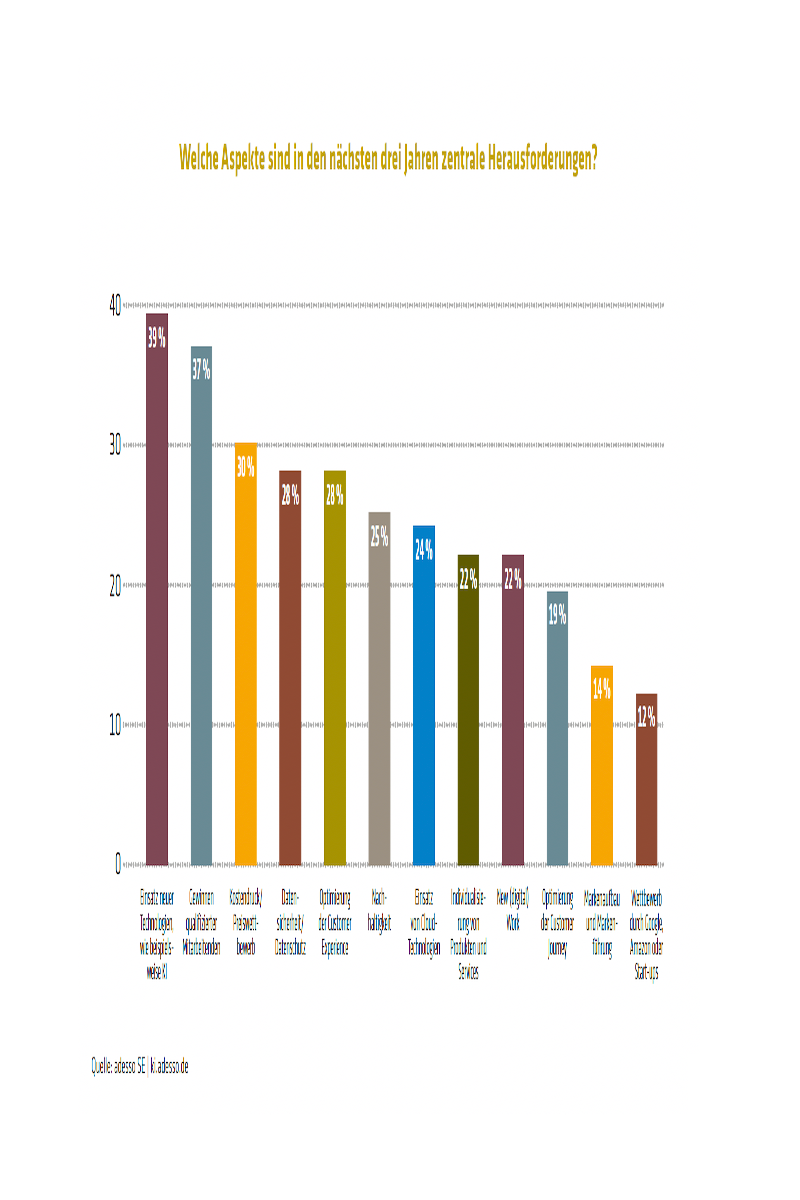

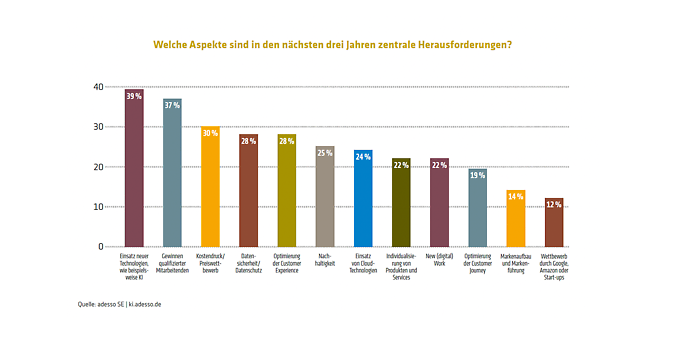

In the adesso AI study ‘AI – taking stock’, 39 per cent of the decision-makers surveyed are convinced that the use of new technologies such as AI will be the key challenge in the next three years. This illustrates how relevant AI is and why organisations need to engage intensively with AI technologies.

Challenge – What does AI ethics deal with?

To understand what AI ethics is about, we first need to understand how AI works. A short digression: artificial intelligence is the ability of a machine application to mimic natural intelligence. This means that ‘machines’ are put in a position where they can make decisions that correspond to human judgement, and this is where ethics comes in. Humans, after all, do not always make ethically correct decisions. We are subject to distorted perceptions (‘unconscious bias’) that influence our decisions without our being aware of them.

Unconscious bias is a cognitive distortion that arises, for example, due to stereotypes. These mostly unconscious errors of perception lead us to assign certain (possibly false) characteristics, qualities and abilities to certain social groups on the basis of incomplete knowledge.

These biases also affect the automated decisions made by machine learning and deep learning systems; this is known as algorithmic bias. Classic machine learning works with data and predefined classifications. Here is a simple example: we have apples and pears, and we tell the machine how the two types of fruit differ (for example, apples are red and pears are green; this is the classification). When the machine receives new data, it can use this classification to determine whether a fruit is an apple or a pear.
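To make the apple-and-pear example a little more concrete, here is a minimal Python sketch. It assumes that colour is the only property and that a human has labelled every training example; the names `train` and `classify` are hypothetical and not taken from any particular library.

```python
from collections import Counter, defaultdict

# Labelled training data: each fruit's colour together with the label
# a human has assigned to it (the "predefined classification").
training_data = [
    ("red", "apple"),
    ("red", "apple"),
    ("green", "pear"),
    ("green", "pear"),
]

def train(examples):
    """Learn, for each colour, the fruit label seen most often with it."""
    counts = defaultdict(Counter)
    for colour, fruit in examples:
        counts[colour][fruit] += 1
    return {colour: c.most_common(1)[0][0] for colour, c in counts.items()}

def classify(model, colour):
    """Return the learned label, or None for a colour never seen in training."""
    return model.get(colour)

model = train(training_data)
print(classify(model, "red"))     # apple
print(classify(model, "green"))   # pear
print(classify(model, "yellow"))  # None
```

The model can only reproduce what the labelled data and the chosen property tell it, which is exactly why the choice of data and properties matters so much once the decision is about people rather than fruit.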

But what if the decision does not concern apples and pears, but a person XY? And what if the classification determines whether person XY, who comes from district YZ, receives a loan, because someone has previously decided that people from district YZ are unlikely to be able to pay off a loan? Here we are squarely in the realm of ethics, and because humans define the classification themselves, they have considerable influence on the outcome.
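The loan scenario can be sketched the same way. The following is a deliberately biased, purely hypothetical rule: the district set, the `Applicant` fields and the income threshold of 2,000 are all illustrative assumptions, not a real scoring model. It shows how a human-defined classification by district overrides everything else about the individual.

```python
from dataclasses import dataclass

# Hypothetical rule: someone has decided in advance that applicants
# from district "YZ" are unlikely to repay a loan.
HIGH_RISK_DISTRICTS = {"YZ"}

@dataclass
class Applicant:
    name: str
    district: str
    monthly_income: int  # hypothetical field, in euros

def approve_loan(applicant: Applicant) -> bool:
    """Biased rule: rejects every applicant from a 'high-risk' district."""
    if applicant.district in HIGH_RISK_DISTRICTS:
        return False  # the district alone decides, whatever the income
    return applicant.monthly_income >= 2000  # hypothetical threshold

xy = Applicant("XY", district="YZ", monthly_income=4000)
ab = Applicant("AB", district="QR", monthly_income=4000)
print(approve_loan(xy))  # False
print(approve_loan(ab))  # True
```

Two applicants with identical incomes receive different outcomes solely because of where they live; the bias sits in the rule a person wrote, not in any individual's ability to repay.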

In deep learning, the machine works out for itself which characteristics distinguish the classes. To do this, it uses an artificial neural network of simulated nerve cells that learns from a large data set. Facial recognition, from the field of machine vision, is one example. Here, both the amount and the quality of the data are crucial. A classic problem is that image data sets do not include enough people of colour, so facial recognition recognises people of colour less reliably than other people. Here, humans have less direct influence, because the network derives its decision criteria from the data rather than from rules defined by people.

In both cases, however, there are measures that help establish sound AI ethics and enable the AI to make better ethical decisions.

In his book ‘Human Compatible: AI and the Problem of Control’, Stuart J. Russell, Professor of Computer Science at the University of California, Berkeley, in the United States, gives a fitting example of how far-reaching the ethical aspect of AI is: the selection and recommendation algorithms used in social media. These are not particularly intelligent, and yet they influence billions of people. Deepfakes are a more recent example. They are media content (videos, audio files or photos) that looks authentic but has been modified with the help of AI, in particular deep learning. Deepfakes are particularly unethical because they are made to deliberately manipulate people.

Measures related to AI ethics

There are different measures that can be taken to create an ethical AI application that is right for your company. It all starts with the data used for the machine learning algorithm. The data is typically divided into two data sets. The training data set is used to create the AI model, which can then make an (automated) decision about newly collected data. To stay with the simple example above, the model would decide whether a fruit is an apple or a pear based on its colour. The validation data set is then used to test the AI model’s ‘accuracy’ (did the system really classify the red fruit as apples?). This is where the human, the data scientist, comes into play: the data scientist checks the result and decides whether the AI model needs to be adjusted, for example because the validation data set contained fruit that was neither red nor green and therefore could not be assigned to either class.
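The training/validation workflow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: a 25 per cent validation share, a fixed random seed, colour as the only property, and hypothetical helper names (`split`, `train`, `accuracy`, `unassigned`). Real projects would normally use an established library for splitting and evaluation.

```python
import random
from collections import Counter, defaultdict

def split(data, validation_share=0.25, seed=0):
    """Shuffle a copy of the data and split it into training and validation sets."""
    rows = list(data)
    random.Random(seed).shuffle(rows)
    n_validation = int(len(rows) * validation_share)
    return rows[n_validation:], rows[:n_validation]

def train(examples):
    """Learn, for each colour, the fruit label seen most often with it."""
    counts = defaultdict(Counter)
    for colour, fruit in examples:
        counts[colour][fruit] += 1
    return {colour: c.most_common(1)[0][0] for colour, c in counts.items()}

def accuracy(model, examples):
    """Share of examples whose label the model predicts correctly."""
    correct = sum(1 for colour, fruit in examples if model.get(colour) == fruit)
    return correct / len(examples)

def unassigned(model, examples):
    """Examples whose colour the model never saw, so it cannot classify them."""
    return [(colour, fruit) for colour, fruit in examples if colour not in model]

data = [("red", "apple")] * 4 + [("green", "pear")] * 4
training_set, validation_set = split(data)
model = train(training_set)

# Add a yellow apple to the validation data to simulate fruit
# that is neither red nor green.
validation_set.append(("yellow", "apple"))

print(accuracy(model, validation_set))    # 2 of 3 correct
print(unassigned(model, validation_set))  # [('yellow', 'apple')]
```

The `unassigned` list is the kind of result a data scientist would look at when deciding whether the model needs adjusting.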

The data scientist can now consider adding more properties to increase the accuracy of the result, for example, that an apple is round and a pear is oval. In AI, this process is called feature selection or feature engineering, and it also belongs indirectly to the field of AI ethics. With fruit, no unethical decisions will be made. However, if we go back from fruit to the creditworthiness of person XY, we need to make sure that the combined data and properties comply with ethical norms.
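Adding the shape property can be sketched as follows. This is a hypothetical, simple voting scheme chosen for readability (each property votes for the label it has most often seen with that value, and ties yield no decision), not a standard algorithm from any library. The same mechanism would feed any newly added property, such as a district, straight into the decision, which is why each feature needs an ethical check.

```python
from collections import Counter, defaultdict

training_data = [
    ({"colour": "red", "shape": "round"}, "apple"),
    ({"colour": "red", "shape": "round"}, "apple"),
    ({"colour": "green", "shape": "oval"}, "pear"),
    ({"colour": "green", "shape": "oval"}, "pear"),
]

def train(examples, features):
    """For each feature and each of its values, remember the most frequent label."""
    counts = {f: defaultdict(Counter) for f in features}
    for row, label in examples:
        for f in features:
            counts[f][row[f]][label] += 1
    return {
        f: {value: c.most_common(1)[0][0] for value, c in per_value.items()}
        for f, per_value in counts.items()
    }

def classify(model, row):
    """Let every feature with a known value vote; return None if there is no clear winner."""
    votes = Counter(model[f][row[f]] for f in model if row.get(f) in model[f])
    if not votes:
        return None  # no feature value was seen during training
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie between labels
    return ranked[0][0]

colour_only = train(training_data, features=("colour",))
colour_and_shape = train(training_data, features=("colour", "shape"))

yellow_round = {"colour": "yellow", "shape": "round"}
print(classify(colour_only, yellow_round))       # None: yellow was never seen
print(classify(colour_and_shape, yellow_round))  # apple: decided by shape
```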

The following measures support employees and teams in the decision-making process and so help to improve AI ethics:

- When developing an AI application, a diverse team (in terms of gender, cultural background and so on) should always be assembled, so that as many different decision-making options as possible are evaluated and unconscious bias is counteracted.

- Training courses can make people more aware of their unconscious bias. Microsoft, for example, has introduced this type of training as standard in the company, and every new employee must take part in such a course.

- adesso has its own internal campaign, adesso MIND, which aims to foster a more mindful and caring sense of togetherness. Acknowledging and appreciating fellow human beings and colleagues leads to greater acceptance and less stereotyped thinking, and therefore to less unconscious bias.

- Google, Microsoft and the European Commission, for example, have established and introduced AI ethics principles (in the case of the EU, the ‘Ethics guidelines for trustworthy AI’) to ensure that action is taken on AI ethics. Data protection and security also play a major role in these principles.

- The data must have a balanced distribution of properties: the data set should contain roughly as much data with property X as with property Y, so that neither group is put at a disadvantage.
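The last point, a balanced distribution of properties, can be checked automatically before training. The sketch below assumes each record is a dictionary and uses a hypothetical tolerance of 10 percentage points around an equal split; both the function names and the tolerance are illustrative choices. Note that it only sees values that actually occur in the data, so a group that is missing entirely would not be flagged.

```python
from collections import Counter

def property_shares(rows, prop):
    """Return the share of rows for each value of the given property."""
    counts = Counter(row[prop] for row in rows)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}

def is_balanced(rows, prop, tolerance=0.1):
    """True if every observed value's share is within `tolerance` of an equal split."""
    shares = property_shares(rows, prop)
    expected = 1 / len(shares)
    return all(abs(share - expected) <= tolerance for share in shares.values())

skewed = [{"group": "X"}] * 80 + [{"group": "Y"}] * 20
balanced = [{"group": "X"}] * 50 + [{"group": "Y"}] * 50

print(property_shares(skewed, "group"))  # {'X': 0.8, 'Y': 0.2}
print(is_balanced(skewed, "group"))      # False
print(is_balanced(balanced, "group"))    # True
```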

With its New School of IT, adesso has defined and introduced further measures, which I describe in more detail below.

adesso’s New School of IT – Rethinking IT

The New School of IT concept aims to strengthen the competences of IT operations and give them a key position in the company organisation. This enables companies to use their own IT to make their processes more solution-oriented, holistic and thus also more value-oriented. For this to work, companies should focus on the following three areas of activity:

- Ambidextrous attitude: An organisational approach that combines stable IT operations and visionary business ideas via a new culture and new responsibilities.

- Cloud Native Thinking: Cloud computing is the basis for innovation in modern fields such as blockchain, IoT and AI. The aim is to exploit the full potential of the cloud.

- Data mindedness: Data is the basis for AI and for new innovative applications that secure competitive advantages. It is therefore crucial to handle data correctly.

Other elements of adesso’s data mindedness include the following measures that are part of AI ethics:

- Develop data strategies for different scenarios in order to evaluate all relevant options and thereby minimise, among other things, the impact of biases.

- Build an AI strategy that firmly integrates data protection and AI ethics.

- Set up a product/service roadmap with a focus on data and AI.

Conclusion

AI offers great business potential for the future and enormous opportunities to drive forward the success of companies. Thanks to many recent innovations in AI application development, such as the low-code principle or the new possibilities offered by Microsoft Azure, AI applications can now be created more easily and are therefore more widely accessible. As business processes become increasingly automated and therefore much faster, we can concentrate on the essential business values, including value-based ones such as those of AI ethics. That is where we need to go, so that our focus is not only on quantitative values but also on qualitative ones.
