10 October 2023, by Lilian Do Khac

How to use R to integrate an interface into the Aleph Alpha Luminous API

Running large language models behind a fancy, ready-to-use interface makes them accessible to many people, even those who do not know any programming language. To a certain extent, this probably also explains all the hype surrounding foundation models, even though the underlying technology has been around for some time (think GPT-2, released in 2019). In this blog post, I will focus on the backend component in R and explain how R can be used to integrate an interface with Aleph Alpha. Ultimately, achieving full and active integration of such a foundation model will require adapting the existing interfaces and building in control and monitoring mechanisms (#AIGovernance). Below, I explain how to access the Aleph Alpha API using R (with a little help from Python).

API requirements for Aleph Alpha

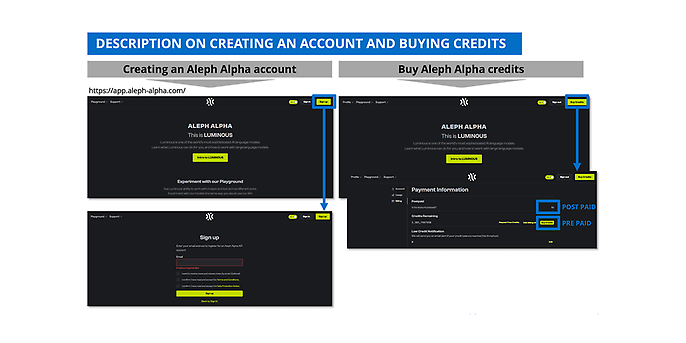

First off, you need an Aleph Alpha token to access the API. You can create this token in your Aleph Alpha account. Figure 1 shows how to create an Aleph Alpha account and add credit to it. By the way, if you are interested in Aleph Alpha, I recommend the article ‘Quickstart with a European Large Language Model: Aleph Alpha’s “Luminous”’ by my colleague Marc Mezger.

Figure 1: How to create an Aleph Alpha account; source: https://app.aleph-alpha.com/

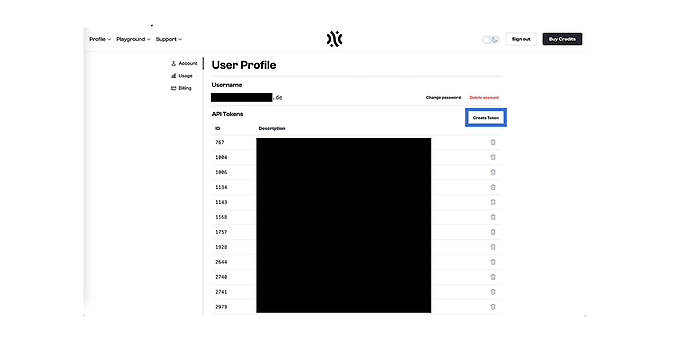

Once you have set up your account, go to ‘Buy Credits’ (Figure 1, top right) > ‘Account’ (to the left of ‘User Profile’; Figure 2, highlighted in blue on the right) to generate a token.

Figure 2: How to generate an Aleph Alpha token; source: https://app.aleph-alpha.com/
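Rather than pasting the token into your scripts, it is common practice to keep it in an environment variable. The following Python sketch shows one way to do this. It assumes a variable named `AA_TOKEN` (a hypothetical name chosen for this example, not something Aleph Alpha prescribes) and assumes that the API expects the token as a bearer token in the `Authorization` header.

```python
import os


def read_token(var_name: str = "AA_TOKEN") -> str:
    """Read the Aleph Alpha API token from an environment variable.

    AA_TOKEN is a hypothetical variable name chosen for this sketch.
    """
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(f"Environment variable {var_name} is not set or empty")
    return token


def auth_headers(token: str) -> dict:
    """Build request headers; assumes the API expects a bearer token."""
    return {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
```

In R, the same variable can be read with `Sys.getenv("AA_TOKEN")` before the token is handed over to Python.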

Aleph Alpha API parameters

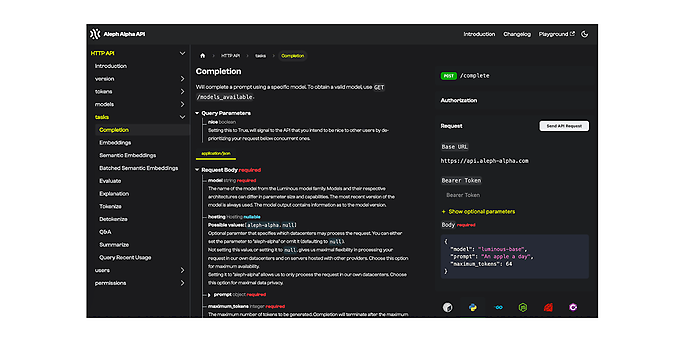

Before we continue, I would like to take a brief look at the key configuration parameters you should know for Luminous. The corresponding documentation is available on the Aleph Alpha website, which also describes the different tasks (endpoints) the API offers. Some parameters are mandatory, while others are optional. One task, for example, is embedding: converting a text input into the numerical (vector) representation that language models can work with.

The most important endpoint is Completion, which you use for your summarisation, evaluation or Q&A instructions, followed by Embedding and Explain. For most ideas, you will not need any other endpoints.

Figure 3: Aleph Alpha API documentation; source: https://docs.aleph-alpha.com/api/complete/
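To make the Completion endpoint more concrete: a request is a JSON object that contains at least the three mandatory parameters from the table below (`model`, `prompt`, `maximum_tokens`). The following Python sketch assembles such a body and only includes the optional sampling parameters when they are set. The function name `completion_body` is hypothetical, and the parameter names should be checked against the current API reference.

```python
import json
from typing import Optional


def completion_body(
    model: str,
    prompt: str,
    maximum_tokens: int,
    temperature: Optional[float] = None,
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
) -> str:
    """Return a JSON body for the Completion endpoint (sketch).

    model, prompt and maximum_tokens are mandatory; the sampling
    parameters are optional and omitted when left as None.
    """
    if maximum_tokens <= 0:
        raise ValueError("maximum_tokens must be positive")
    body = {"model": model, "prompt": prompt, "maximum_tokens": maximum_tokens}
    optional = {"temperature": temperature, "top_k": top_k, "top_p": top_p}
    # Only send optional parameters that were explicitly set (0.0 counts as set).
    body.update({name: value for name, value in optional.items() if value is not None})
    return json.dumps(body)
```

For a deterministic summary, for example, you would call `completion_body("luminous-extended-control", prompt, 260, temperature=0.0)`.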

The following table describes the key parameters:

| Parameter name | Mandatory/optional | Description |
|---|---|---|
| model (string) | Mandatory | The Luminous model family has several members (for example, luminous-base, luminous-extended and luminous-supreme); the model used later in this post is luminous-extended-control. |
| prompt (object) | Mandatory | A prompt is a natural-language instruction used to interact with a language model (we will explore this topic in greater detail later in this post). The maximum context length for Luminous is 2,048 tokens (approx. 2.5 A4 pages). This means that your input and output taken together should not exceed around 2,048 tokens. |
| maximum_tokens (integer) | Mandatory | The maximum number of tokens to be generated. Once this number is reached, generation stops. |
| temperature (number) | Optional | Influences the creativity of the model output. More precisely, it controls how random the choice of the next token is. A temperature of 0 reduces randomness to a minimum and effectively makes the model deterministic, which is very important for applications such as document classification or named entity recognition. A higher temperature allows for more randomness, i.e., candidates with a lower probability also get a ‘chance’. This is very useful when generating new texts. |
| top_k (integer) | Optional | In a sense, top_k does the same thing as temperature: a higher value makes the model output more random. For example, if k = 3, the next token is chosen at random from the three most likely candidates rather than always being the single most likely one. |
| top_p (number) | Optional | In a sense, top_p does the same thing as top_k, except that p is not an integer but a probability threshold between 0 and 1. The smallest set of most likely candidates whose cumulative probability reaches top_p is selected, and the next token is chosen from that set. Temperature, top_k and top_p do not have to be used simultaneously. |
| presence_penalty (number) | Optional | Reduces the probability that a token that has already been generated is generated again. The penalty does not depend on how often the token has already occurred. Requires that repetition_penalties_include_prompt = true. |
| frequency_penalty (number) | Optional | Serves a similar function to presence_penalty. Here, however, the penalty depends on how often the token has already occurred. Requires that repetition_penalties_include_prompt = true. |
| sequence_penalty (number) | Optional | A higher value reduces the likelihood that token sequences already contained in the prompt will be generated again. Requires that repetition_penalties_include_prompt = true. |
| repetition_penalties_include_prompt (boolean) | Optional | Determines whether the prompt is taken into account by the three penalty parameters described above. This is especially important if you do not want the model output to repeat the prompt. |
| repetition_penalties_include_completion (boolean) | Optional | Determines whether the text generated so far is taken into account by the three penalty parameters described above. This is especially important if you do not want the model output to repeat itself. |
| best_of (integer) | Optional | The model generates best_of output candidates and returns only the best of them. |
| stop_sequences (array of strings) | Optional | Generation stops as soon as one of these sequences is generated. |
| target (string) | Mandatory (for ‘Explain’) | The output whose generation is to be explained. This input is only relevant for ‘Explain’. |
| prompt_granularity (object) | Mandatory (for ‘Explain’) | Selects the granularity of the explanations. Options: Token, Word, Sentence, Paragraph and Custom. This input is only relevant for ‘Explain’. |
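To illustrate how temperature, top_k and top_p shape the set of candidates, here is a small Python sketch of the textbook versions of these three techniques, applied to a made-up distribution of next-token probabilities. It is not Aleph Alpha's implementation; it only shows which candidates remain eligible and with what probability. The final random draw (for example with `random.choices`) is left out so that the results are reproducible.

```python
def apply_temperature(probs: dict, temperature: float) -> dict:
    """Sharpen (T < 1) or flatten (T > 1) a distribution: p_i ** (1/T), renormalised.

    This is equivalent to dividing the logits by T before the softmax.
    Temperature 0 is the limiting case of always picking the top candidate.
    """
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use greedy decoding for 0")
    scaled = {tok: p ** (1.0 / temperature) for tok, p in probs.items()}
    total = sum(scaled.values())
    return {tok: v / total for tok, v in scaled.items()}


def top_k_filter(probs: dict, k: int) -> dict:
    """Keep only the k most likely candidates and renormalise."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def top_p_filter(probs: dict, top_p: float) -> dict:
    """Keep the smallest set of most likely candidates whose cumulative
    probability reaches top_p (nucleus sampling) and renormalise."""
    kept, cumulative = [], 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


# Toy next-token distribution (made-up numbers for illustration only).
probs = {"court": 0.5, "judge": 0.3, "ruling": 0.15, "banana": 0.05}
```

With k = 2 or top_p = 0.75, only "court" and "judge" remain eligible; a temperature below 1 makes "court" even more likely, while a temperature above 1 gives "ruling" and "banana" a better ‘chance’.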

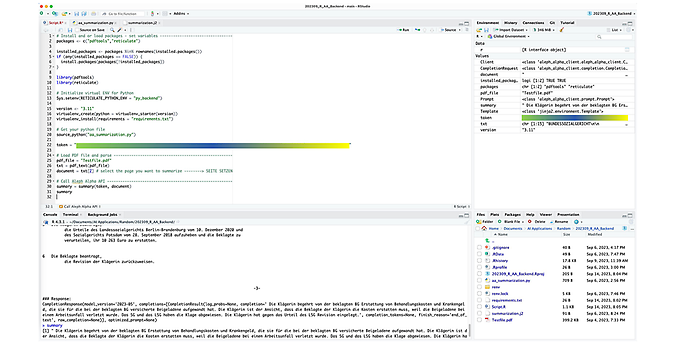

R preconditions

To access the Aleph Alpha API from R, you need the libraries loaded in lines 9 to 10 of Figure 4. You will also need pdftools to parse PDF documents (lines 27 to 28) and reticulate to run Python code. The Python packages you need are listed in lines 16 to 19. We will take a closer look at the Python file and the Jinja file in the next section. In the script in Figure 4, I load a publicly available court ruling as an example. My goal is to have the system generate a short summary of a specific page. The resulting summary is printed to the console and is also shown in Figure 5.

Figure 4: R script

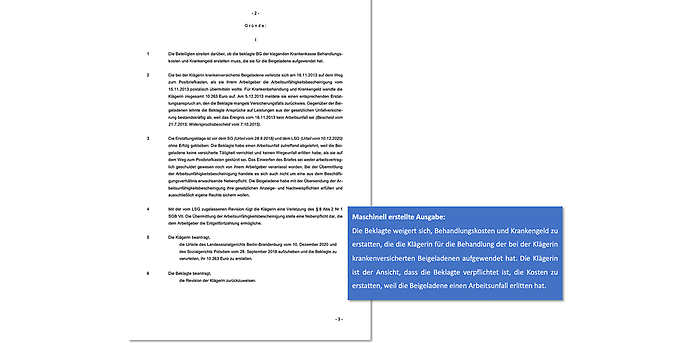

Figure 5: The machine-generated summary produced using Luminous, https://www.sozialgerichtsbarkeit.de/node/174141

How to access the Aleph Alpha API using R

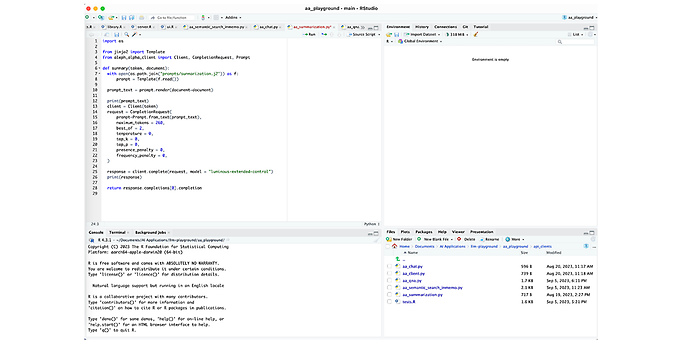

In Figure 4 (lines 22 and 33 to 34), we use R to access the Aleph Alpha API. Unfortunately, there is no R client for this task; clients are only available for Python, Rust and some other languages. This is why we run Python via reticulate and have created a Python file for this purpose (see Figure 6). In this file, the parameters passed from R (the token and the parsed PDF page) are forwarded to Aleph Alpha, and the result is then fed back into R. From line 14 onwards, you can see some of the parameters described above. For the summary, I used luminous-extended-control and limited the output to a maximum of 260 tokens. In line 7 of Figure 6, a Jinja file is loaded; this is where I have stored the prompt for the summary.

Figure 6: Python File
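Since the Python file is only available as a screenshot, here is a rough, self-contained sketch of the same idea. Instead of a client package, it calls the REST API directly using only the Python standard library, with luminous-extended-control and a limit of 260 output tokens. The endpoint URL, the bearer-token header and the response layout (`{"completions": [{"completion": ...}]}`) are assumptions based on the public API documentation, and `build_request`, `extract_completion` and `summarise_page` are hypothetical names.

```python
import json
import urllib.request

# Assumption: URL of the Completion endpoint.
API_URL = "https://api.aleph-alpha.com/complete"


def build_request(token: str, prompt: str,
                  model: str = "luminous-extended-control",
                  maximum_tokens: int = 260) -> urllib.request.Request:
    """Prepare (but do not send) a Completion request."""
    body = json.dumps({
        "model": model,
        "prompt": prompt,
        "maximum_tokens": maximum_tokens,
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def extract_completion(response_json: dict) -> str:
    """Pull the generated text out of the (assumed) response layout."""
    return response_json["completions"][0]["completion"].strip()


def summarise_page(token: str, prompt: str) -> str:
    """Hypothetical entry point that R can call via reticulate."""
    with urllib.request.urlopen(build_request(token, prompt), timeout=60) as resp:
        return extract_completion(json.load(resp))
```

From R, `reticulate::source_python("summarise.py")` (the file name is only an example) makes `summarise_page()` available, so it can be called with the token and the prompt built from the parsed PDF page.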

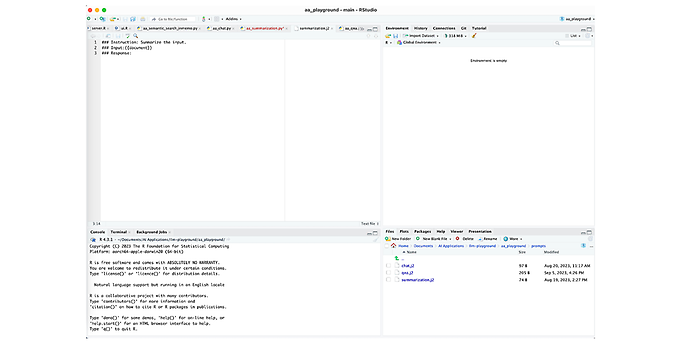

Figure 7 shows everything you need to set up the prompt and access the Aleph Alpha instruction models: an instruction (in this case, a request to summarise the input), an input (the parsed PDF page) and a response (the output). You could also write the prompt directly into the Python or R code, but a Jinja file is more elegant and makes the prompt easier to share or audit later.

Figure 7: Jinja file
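Because the template is also only shown as a screenshot, here is a sketch of what such a prompt could look like. The ‘### Instruction / ### Input / ### Response’ layout is an assumption modelled on the three parts described above, and the instruction wording is purely illustrative. To keep the example free of dependencies, it uses Python's built-in `string.Template` instead of Jinja; in a Jinja file, the placeholder would be written as `{{ text }}`.

```python
from string import Template

# Hypothetical prompt layout with the three parts described above.
SUMMARY_PROMPT = Template(
    "### Instruction:\n"
    "Summarise the following text in a few sentences.\n\n"
    "### Input:\n"
    "$text\n\n"
    "### Response:"
)


def render_prompt(page_text: str) -> str:
    """Insert the parsed PDF page into the template."""
    # substitute() raises KeyError if a placeholder is left unfilled.
    return SUMMARY_PROMPT.substitute(text=page_text.strip())
```

Ending the prompt with the ‘Response’ marker leaves the model to continue from exactly the point where the summary should begin.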

Outlook

I hope I was able to show how useful R can be in combination with these new technologies. It gets even better when you connect it to R Shiny: this lets you quickly and flexibly create mock-ups with R and R Shiny using Aleph Alpha Luminous (or other foundation models, such as those from OpenAI or Cohere), where in other situations these would probably just be paper sketches. This way, ideas can be tested in a visually appealing way and discussed with other participants. In addition, any pre-processing steps that may be needed can be identified early on and incorporated into an implementation.

Would you like to learn more about exciting topics from the adesso world? Then take a look at our other blog posts.
