3 August 2021, by Marcus Peters

Cloud native, multi cloud or rather cloud agnostic?

The motivation for IT managers to run applications, or rather ‘workloads’, in the cloud is hotly debated time and again. Positive aspects that are often mentioned include eliminating the management of infrastructure components, the possibility of scaling under load or the reduction of operating costs. In the meantime, however, it has become clear that these arguments are not generally tenable and that a data centre in the cloud must be professionally controlled in order to deliver the desired benefits.

There is no doubt that cloud technologies are part of the digitalisation strategy of most companies, since many IT requirements can be implemented more flexibly and efficiently in the cloud than in the company’s own data centre. This does not apply across the board, of course: security aspects and hybrid scenarios have to be taken into account.

Often the first step into the cloud is taken by moving virtual machines into the cloud infrastructure. This procedure is also referred to as ‘lift and shift’. And this is where it starts to get interesting: if an organisation gets stuck in this phase, the enjoyment to be had with the cloud will be limited. Although the hardware no longer has to be managed, all other elements, from the operating system, runtime components and persistence mechanisms to the application itself, still have to be organised and maintained.

What was that about cloud native again?

The Cloud Native Computing Foundation defines cloud native technologies as follows:

‘Cloud native technologies empower organisations to build and run scalable applications in modern, dynamic environments such as public, private and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure and declarative APIs exemplify this approach.’

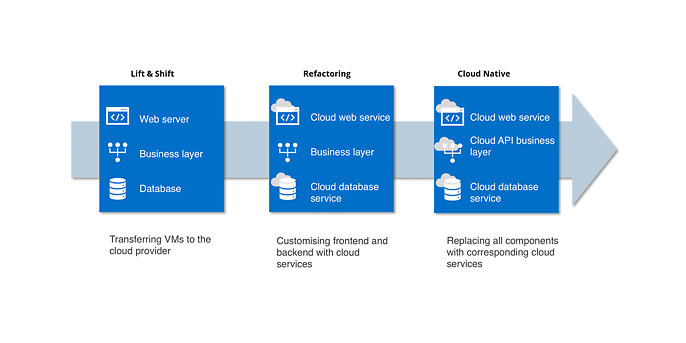

This means that an application is considered to be ‘native’ if it uses the mechanisms for abstraction of the operating system, runtime or persistence and therefore does not run in a virtual machine. In the case of a typical web application with a database for storing data, corresponding services of the cloud provider could replace the web server as well as the database. An example of a transfer to this state is shown in the following figure.

Example transformation of a web application to cloud native via ‘refactoring’.

The full performance of the cloud only unfolds when the services of the cloud provider are used.

A typical criticism of such an approach is that it is tied to a cloud provider. As soon as an application is natively integrated into the provider’s environment, a dependency on this provider arises along with the much-cited ‘vendor lock-in’.

However, it is worth looking at this point in more detail: the more closely a cloud application is ‘bound’ to the underlying layers of the provider, in other words the more natively it accesses the provider’s services, the better the application runs at and with this provider. The ‘computer under the desk’ serves as an analogy here. An application that has been optimised for a selected operating system has the full performance of the system at its disposal. However, this very optimisation makes the application less portable and harder to transfer to another operating system.

To work around this – as with software that can run on different operating systems – multi-cloud approaches are often used.

And what does multi cloud do?

Multi cloud means, as the name suggests, that a company decides to use hyperscalers from different vendors. In most cases, one of two scenarios is covered: either a company chooses a different cloud provider for each workload, depending on the situation, or a workload is distributed across different cloud providers via a cloud services abstraction.

The first case is quite simple and follows typical strategies to diversify the supplier pool. These days, hardly any company has all its software from one manufacturer, and that is a good thing. When selecting cloud services, it can also make sense to select individual services on the basis of a ‘best-of-breed’ approach in addition to a platform strategy in order to save costs directly or maximise profits.

However, this means that the individual application will still run on exactly one hyperscaler. What was said above about getting the full performance through cloud-native approaches then applies here as well.

Cloud agnostic – running one application simultaneously on different clouds

If you want to completely decouple yourself from a cloud provider, there is no option other than to follow a cloud-agnostic approach. This means that the application either runs simultaneously on different hyperscalers or is distributed across them in one of the typical redundancy scenarios of cold, warm and hot standby.
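The standby scenarios can be made a little more concrete with a minimal Python sketch. It assumes the common reading of the terms (hot: running and ready to take over at once; warm: running at reduced capacity; cold: provisioned only when needed). The names `Role`, `Deployment` and `choose_target` as well as the provider labels are hypothetical and only serve as an illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Role of a deployment; a lower value means faster to take over."""

    ACTIVE = 0  # currently serving traffic
    HOT = 1     # running and ready to take over immediately
    WARM = 2    # running at reduced capacity, has to scale up first
    COLD = 3    # not running, has to be provisioned first


@dataclass(frozen=True)
class Deployment:
    provider: str  # hypothetical label such as "cloud-a"
    role: Role
    healthy: bool = True


def choose_target(deployments: list[Deployment]) -> Deployment:
    """Return the healthy deployment that can serve traffic soonest."""
    healthy = [d for d in deployments if d.healthy]
    if not healthy:
        raise RuntimeError("no healthy deployment available")
    return min(healthy, key=lambda d: d.role.value)


deployments = [
    Deployment("cloud-a", Role.ACTIVE, healthy=False),  # primary has failed
    Deployment("cloud-b", Role.COLD),
    Deployment("cloud-c", Role.WARM),
]
target = choose_target(deployments)
print(f"route traffic to {target.provider} ({target.role.name})")
```

In a real setup the health flag would come from monitoring, and switching to a warm or cold standby would additionally involve scaling or provisioning steps that this sketch leaves out.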

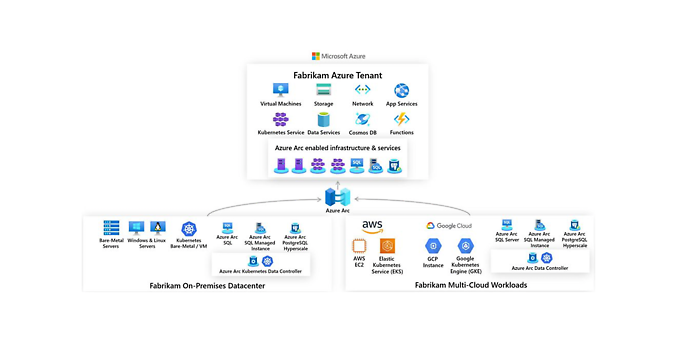

However, this degree of freedom makes things complex and is not to be underestimated. An obvious approach is to distribute the application via containers. These are optimised for the respective cloud environment and corresponding components are available from many manufacturers. In addition to the ‘Red Hat OpenShift’ solution chosen by Forrester as the market leader in the ‘Forrester Wave’, Microsoft, Google and AWS also provide suitable mechanisms. The following figure shows an example of architecture for managing a multi-cloud environment with Microsoft’s Azure Arc.

Multi cloud with Azure Arc, source: Microsoft.com

However, this approach and the motivation behind it need to be considered in detail. Not every application is generally suitable for every container scenario of all cloud providers. Differences in the underlying computing or network infrastructure, as well as the proximity to dependent services, can lead to a significantly different level of performance. Moving an application between cloud environments might also make it necessary to move data. Besides economic parameters, environments often differ in the services and facilities they provide to store and manage this data.

Besides distributing via containers, another cloud-agnostic approach is to incorporate an abstraction layer that unifies the communication with, and the behaviour of, the different cloud providers. This is shown in the following figure.

Multi-cloud scenario with an abstraction mechanism

This approach makes it possible to operate the application in a distributed manner via an abstraction layer with subsequent native coupling to the services of the cloud providers. However, the greatest amount of effort is required to achieve this and, as when distributing via containers, there is no guarantee that every last ounce of performance will be squeezed from all clouds.
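A minimal Python sketch can illustrate the idea of such an abstraction layer. It assumes a simple object store as the abstracted service; the names `ObjectStore`, `InMemoryStore` and `ReplicatedStore` are hypothetical, and the in-memory adapter merely stands in for the provider-specific code that would call each provider's native services.

```python
from __future__ import annotations

from typing import Protocol


class ObjectStore(Protocol):
    """Provider-neutral interface the application codes against."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in for a provider-specific adapter (assumption).

    A real adapter would call the provider's native service here; this
    one keeps data in a dict so the sketch runs without a cloud account.
    """

    def __init__(self, provider: str) -> None:
        self.provider = provider
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


class ReplicatedStore:
    """Abstraction layer: writes to all backends, reads from the first hit."""

    def __init__(self, backends: list[ObjectStore]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = backends

    def put(self, key: str, data: bytes) -> None:
        for backend in self._backends:
            backend.put(key, data)

    def get(self, key: str) -> bytes:
        for backend in self._backends:
            try:
                return backend.get(key)
            except KeyError:
                continue  # try the next provider
        raise KeyError(key)


cloud_a = InMemoryStore("cloud-a")
cloud_b = InMemoryStore("cloud-b")
store = ReplicatedStore([cloud_a, cloud_b])
store.put("report.txt", b"quarterly figures")
print(store.get("report.txt"))
```

The application only ever sees `ObjectStore`, so adding or removing a provider means writing or dropping one adapter. The price is visible even in this small sketch: the interface is limited to what all providers have in common, which is one reason why not every last ounce of performance can be squeezed from each cloud.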

Conclusion

A multi-cloud strategy can – if well considered – be a sensible alternative to ‘everything from a single source’. However, it is important to ask what exactly is to be achieved and whether it is really necessary for an application to run in a cloud-agnostic manner on different clouds, or whether it would not be easier to define a home in the cloud for one application at a time. In the latter case, it then makes sense to consider cloud-native approaches for the respective applications in order to exploit the full potential.
